I don't think 30 seconds is a bad number at all for 1.4M queries! I'd have actually anticipated this to be much slower. Imagine what you are doing: You are requesting the data from PHP, a high-level language not really meant for data processing. Dividing your 30 seconds by the 1.4M queries means your Pi-hole is only using 21 µs (yes, micro not milli) per query. This is actually very very fast.

But I still see your point, and server-side pagination is definitely something we already have implemented and tested for the next generation of Pi-hole (where it is written in C and compiled to native machine code for maximum speed). However, you'll also understand that we will not backport this into PHP for Pi-hole v5.x.

It all comes down to saying: Don't do this.

Don't query a year's worth of domains. If you want to do research spanning large time windows, I recommend interacting directly with the long-term database.

For instance,

sqlite3 /etc/pihole/pihole-FTL.db "SELECT COUNT(*) FROM queries WHERE domain = 'google.com' AND client = '192.168.0.1';"

will compute the number of times google.com has been requested by 192.168.0.1 within your long-term data. This query will be both faster and far less memory-hungry than doing something like this through the Pi-hole frontend.
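If you want to script this kind of research over a time window, something along these lines should work. It is only a minimal sketch in Python: `count_queries` is a made-up helper name, and the Unix-epoch `timestamp` column is an assumption about the table layout (check with `.schema queries` in the `sqlite3` shell). The demo runs against a throwaway in-memory table rather than the real database.

```python
import sqlite3


def count_queries(conn, domain, client, since=None, until=None):
    """Count rows for one domain/client pair, optionally limited to a time window.

    `since` and `until` are Unix timestamps (assumed column name: `timestamp`).
    Only a single integer is returned, so memory use stays tiny no matter how
    many rows match.
    """
    sql = "SELECT COUNT(*) FROM queries WHERE domain = ? AND client = ?"
    params = [domain, client]
    if since is not None:
        sql += " AND timestamp >= ?"
        params.append(int(since))
    if until is not None:
        sql += " AND timestamp <= ?"
        params.append(int(until))
    return conn.execute(sql, params).fetchone()[0]


# Demo on an in-memory table using the assumed three columns.
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE queries (timestamp INTEGER, domain TEXT, client TEXT)")
demo.executemany(
    "INSERT INTO queries VALUES (?, ?, ?)",
    [
        (100, "google.com", "192.168.0.1"),
        (200, "google.com", "192.168.0.1"),
        (300, "google.com", "192.168.0.2"),
    ],
)
print(count_queries(demo, "google.com", "192.168.0.1"))             # 2
print(count_queries(demo, "google.com", "192.168.0.1", since=150))  # 1
```

Against the real data you would pass a connection to /etc/pihole/pihole-FTL.db instead, ideally opened read-only, e.g. `sqlite3.connect("file:/etc/pihole/pihole-FTL.db?mode=ro", uri=True)`.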

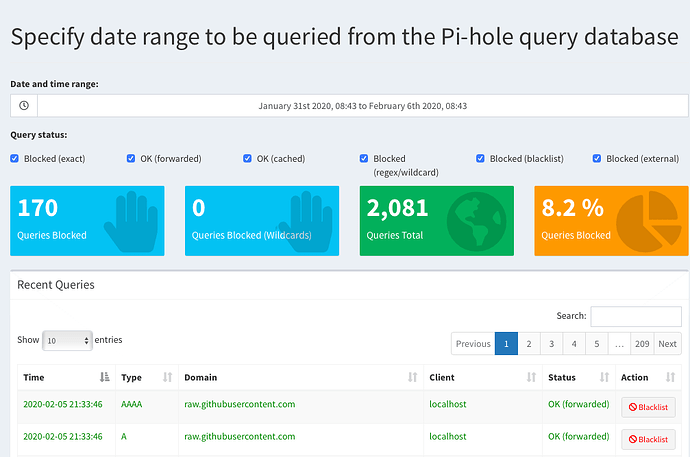

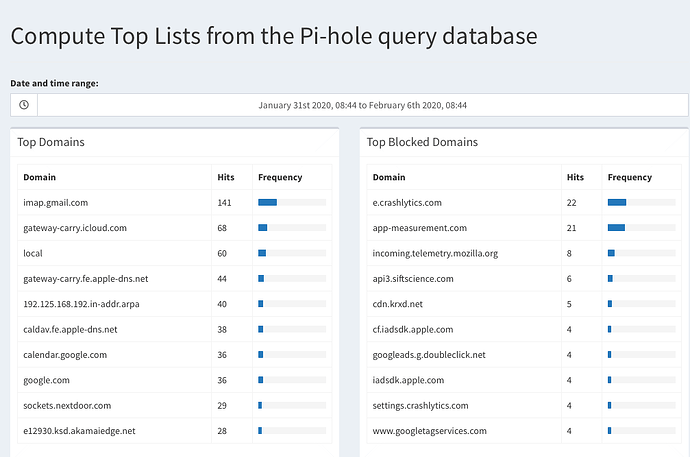

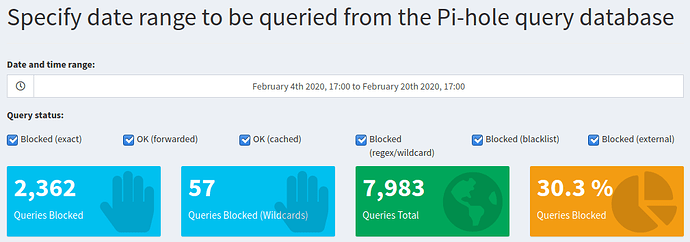

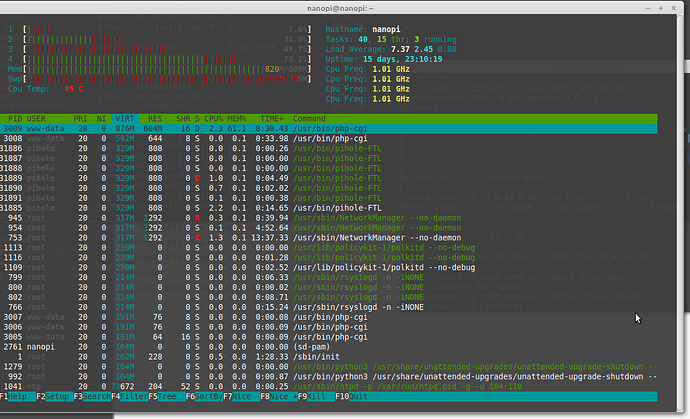

Well, 442000 queries. That's quite a lot. Assume the average domain is 20 bytes in length, then there is the timestamp, the client IP, the reply type and delay, etc. easily making 100 bytes for each result row.

While this is already a quite conservative estimate, it already goes up to 442000*100 Bytes = (roughly) 44 Megabytes. Add the bookkeeping data PHP needs to build the result array on top. Furthermore, there is also the SQL interface querying this data, which needs memory for itself so it can process the requests with all the filtering by time and maybe status. There isn't much we can do about this raw amount of data.
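Spelled out, the back-of-the-envelope calculation looks like this. The sketch only covers the raw row data using the 100 bytes per row from above; the PHP bookkeeping and the SQL interface come on top and are not modelled here. The function name is just for illustration.

```python
def raw_result_bytes(rows, bytes_per_row=100):
    """Raw payload size of a result set, ignoring any PHP or SQLite overhead."""
    return rows * bytes_per_row


raw = raw_result_bytes(442_000)
raw_mb = raw / 1_000_000  # decimal megabytes
print(f"{raw} bytes = {raw_mb:.1f} MB")  # 44200000 bytes = 44.2 MB
```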

FTL can handle much larger numbers of queries; however, that's because it uses a clever (are you allowed to say this about your own thing?) compression/memory-optimization algorithm that ensures not only minimal overhead but also reduced memory needs when a given string (like a domain name) comes up several times. With PHP, we cannot do this.
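To illustrate the general idea of storing a repeated string only once, here is a small sketch in Python. This is not FTL's actual code or algorithm, just a generic string pool: every distinct string is kept a single time and each query only stores a small integer pointing at it. `StringPool` and its methods are made-up names.

```python
class StringPool:
    """Stores each distinct string once and hands out integer IDs for it."""

    def __init__(self):
        self._ids = {}      # string -> ID
        self._strings = []  # ID -> string

    def intern(self, s):
        """Return the ID for `s`, adding it to the pool on first sight."""
        idx = self._ids.get(s)
        if idx is None:
            idx = len(self._strings)
            self._ids[s] = idx
            self._strings.append(s)
        return idx

    def lookup(self, idx):
        """Return the string that belongs to an ID."""
        return self._strings[idx]

    def __len__(self):
        return len(self._strings)


pool = StringPool()
domains = ["google.com", "example.com", "google.com", "google.com"]
# Each query keeps only an integer; the domain text lives once in the pool.
query_domain_ids = [pool.intern(d) for d in domains]
print(query_domain_ids, len(pool))  # [0, 1, 0, 0] 2
```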

I don't think that this has changed from v4.x -> v5.0

There isn't anything we can do about this, as it is inherent in how PHP works. PHP and SQLite aren't able to parallelize this task.

Well, not really. The browser merely sees an error 500 being returned from the server when PHP is killed due to memory exhaustion. The error message that gets logged by PHP into /var/log/lighttpd/error.log is not available to the client.

Just printing the message

"Error: Not enough RAM allocated to PHP"

because we assume that this may be the reason can easily be misleading. Just imagine users modified their local source code and the error 500 is actually caused by a syntax error.

However, I see two possible solutions here:

- Extend the text we show

  An unknown error occurred with loading the data

  to something like

  An unknown error occurred with loading the data.

  Check the server's log files (/var/log/lighttpd/error.log when you're using the default Pi-hole web server) for details. You may need to increase the memory available for Pi-hole in case you requested a lot of data.

- While it is not possible to catch fatal errors (as they are fatal and not simple exceptions), it is actually possible to implement our own error handling routines which could handle this kind of error. However, this is somewhat lower-level and should be explored for v5.1.

I'm assigning myself to this topic to remind myself to have a look.