I have 34 setup currently. I use the pihole-updatelists tool, and it looks like it’s grown a bit over the last year or so.

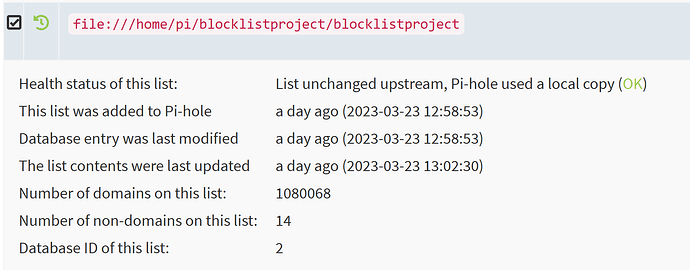

I have approx. 120 lists, of which approx. 70-80 are active; some are local. The two largest, each with more than 1 million entries, I have currently disabled because of the described effect.

This reduced the total number of entries from 5.7 to 3.7 million.

The pihole -g command with the described new setup (without the 2 big lists) runs fine in 20-30 mins.

I'm seeing the same hanging behaviour with the latest update. The adlist update which took place in the terminal after the update took noticeably longer. I've just added the oisd big list (which is in ABP format and says it's compatible with Pi-hole 5.16+) just to test performance and done another gravity update from the web admin page, and it's got part way through and hung. The terminal cursor isn't even blinking and DNS queries are unavailable.

$ dig example.com

; <<>> DiG 9.10.6 <<>> example.com

;; global options: +cmd

;; connection timed out; no servers could be reached

Edit: it's responding very intermittently after several minutes and has a warning which I've never seen before on this Pi 3B+

Type: LOAD

Message: Long-term load (15min avg) larger than number of processors: **7.7 > 4**

This may slow down DNS resolution and can cause bottlenecks.
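The comparison behind that warning can be reproduced by hand. This is only a minimal sketch of the idea (15-minute load average versus processor count), not Pi-hole's own check; the function name `load_exceeds_cpus` is made up for illustration.

```shell
# Succeeds (exit status 0) when the 15-minute load average exceeds the CPU count.
# Both values are passed in, so the check can be tried with any numbers.
load_exceeds_cpus() {
    # awk does the floating-point comparison that plain shell arithmetic cannot
    awk -v l="$1" -v c="$2" 'BEGIN { exit !(l > c) }'
}

# Values from the warning above. On a live Linux system they could be read with:
#   load15=$(cut -d ' ' -f 3 /proc/loadavg)
#   cpus=$(nproc)
if load_exceeds_cpus 7.7 4; then
    echo "15min load 7.7 exceeds 4 processors"
fi
```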

I suspect something in the new ABP format parsing is the cause. I'll post more later if it recovers as I have atop running which will have captured the process usage around the gravity update.

Edit: visually inspected Pi, the SD card green light is on solid, indicating heavy I/O. It's normally off and blinks very intermittently in normal use.

Pi4 Model B - I have approx. 2.5 mio. entries on 6 adlists.

The update took a little longer, but not significantly.

BRG

Thomas

The gravity update took approx. 30 mins (it normally takes 3 minutes) but has left things in a messed-up state. Lots of errors like this (although the debug log with -c doesn't see any problems):

[2023-03-24 08:04:22.409 561/T627] Error while trying to close database: database is locked

[2023-03-24 08:07:01.172 561/T626] WARNING: Storing devices in network table failed: database is locked

[2023-03-24 08:08:01.226 561/T626] ERROR: SQL query "END TRANSACTION" failed: database is locked (SQLITE_BUSY)
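For anyone who wants to see how often this happens on their own system, here is a rough sketch that counts the "database is locked" lines in a log file. The function name is hypothetical, and the default path `/var/log/pihole/FTL.log` is an assumption that may differ on your install.

```shell
# Prints how many lines of the given log file mention "database is locked".
count_db_locked() {
    # grep -c prints 0 but exits non-zero when nothing matches, hence "|| true"
    grep -c 'database is locked' "$1" || true
}

# Assumed log location; pass a different path as the first argument if needed.
log="${1:-/var/log/pihole/FTL.log}"
if [ -r "$log" ]; then
    echo "database is locked: $(count_db_locked "$log") lines in $log"
fi
```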

atop reveals that the gravity update maxed out the memory and swap space on the card, with the virtual memory manager maxing out the cpus.

In atop, kswapd0 was maxed out; three 10-minute blocks looked similar to this:

ATOP - pihole 2023/03/24 07:30:38 ---------------- 10m0s elapsed

PRC | sys 9m59s | user 34.92s | #proc 182 | #trun 2 | #tslpi 220 | #tslpu 16 | #zombie 0 | clones 822 | | #exit 725 |

CPU | sys 100% | user 6% | irq 1% | idle 28% | wait 265% | guest 0% | ipc 0.86 | cycl 342MHz | avgf 1.40GHz | avgscal 99% |

cpu | sys 48% | user 1% | irq 0% | idle 7% | cpu001 w 44% | guest 0% | ipc 0.89 | cycl 601MHz | avgf 1.40GHz | avgscal 99% |

cpu | sys 46% | user 2% | irq 0% | idle 8% | cpu002 w 44% | guest 0% | ipc 0.88 | cycl 616MHz | avgf 1.40GHz | avgscal 99% |

cpu | sys 3% | user 2% | irq 0% | idle 6% | cpu003 w 89% | guest 0% | ipc 0.67 | cycl 90MHz | avgf 1.40GHz | avgscal 99% |

cpu | sys 3% | user 1% | irq 0% | idle 8% | cpu000 w 89% | guest 0% | ipc 0.68 | cycl 61MHz | avgf 1.40GHz | avgscal 99% |

CPL | avg1 18.87 | avg5 15.15 | avg15 7.77 | | | csw 762934 | intr 4981056 | | | numcpu 4 |

MEM | tot 909.6M | free 17.3M | cache 15.2M | dirty 0.0M | buff 0.2M | slab 50.7M | shrss 9.4M | vmbal 0.0M | zfarc 0.0M | hptot 0.0M |

SWP | tot 100.0M | free 0.1M | | | swcac 2.1M | | | | vmcom 2.5G | vmlim 554.8M |

PAG | scan 10962e4 | steal 5691e3 | stall 0 | | | | | | swin 359 | swout 25081 |

DSK | mmcblk0 | busy 94% | read 133515 | write 1015 | KiB/r 82 | KiB/w 158 | MBr/s 17.9 | MBw/s 0.3 | avq 18.58 | avio 4.16 ms |

NET | transport | tcpi 543 | tcpo 530 | udpi 102 | udpo 95 | tcpao 19 | tcppo 0 | tcprs 0 | tcpie 0 | udpie 138 |

NET | network | ipi 1144 | ipo 633 | ipfrw 0 | deliv 1144 | | | | icmpi 4 | icmpo 4 |

NET | eth0 ---- | pcki 1669 | pcko 614 | sp 0 Mbps | si 16 Kbps | so 0 Kbps | erri 0 | erro 0 | drpi 0 | drpo 0 |

NET | lo ---- | pcki 38 | pcko 38 | sp 0 Mbps | si 0 Kbps | so 0 Kbps | erri 0 | erro 0 | drpi 0 | drpo 0 |

PID SYSCPU USRCPU RDELAY VGROW RGROW RDDSK WRDSK RUID EUID ST EXC THR S CPUNR CPU CMD 1/21

55 8m54s 0.00s 0.49s 0K 0K 0K 0K root root -- - 1 R 1 90% kswapd0
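If atop isn't installed, a similar picture of memory and swap pressure can be taken from the kernel's meminfo data while gravity is running. A minimal sketch, assuming a Linux kernel that exposes `MemAvailable` and `SwapFree` in `/proc/meminfo`; the function name `mem_summary` is made up for this example.

```shell
# Prints available memory and free swap in MiB from a meminfo-format file.
mem_summary() {
    awk '
        /^MemAvailable:/ { avail = $2 }   # value is reported in kB
        /^SwapFree:/     { swap = $2 }    # value is reported in kB
        END { printf "available=%dMiB swapfree=%dMiB\n", avail / 1024, swap / 1024 }
    ' "$1"
}

# Live reading, if the file is present on this system
if [ -r /proc/meminfo ]; then
    mem_summary /proc/meminfo
fi
```

Running it every few seconds during `pihole -g` (for example from a second terminal) should show whether both values head towards zero, as in the atop output above.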

Debug log is https://tricorder.pi-hole.net/qusN2nfN/

I can send the atop data file to tricorder if you want to inspect it and there's a way to send a 1MB binary data file there.

We are busy trying to recreate and analyse the circumstances that would favour your observation.

Obviously, some of us were able to use those two lists on their installations without issues, making it tricky to recreate.

So far, it would seem that both the size of a single blocklist as well as the overall number of blocklists and maybe their order would be contributing to sluggish gravity update performance. Also, this is more likely to be triggered on systems with very low memory (<512M).

How much memory does your system have?

1GB RAM and 1GB paging file, pi 3B+

Thank you.

So you've got around 80 active lists totalling 3.7M entries on a 1GB RAM system.

This may also be interesting to know from other users.

I'll add some lists to my Pi-hole and see how a 256M NanoPi handles them.

Let me know if you need a Tricorder upload or more info.

NanoPI NEO2, 1GB, 1GB swap-file

Just one block list (Hagezi Ultimate, 2.203.168 hosts)

It's a really huge difference: from approx. 1 min processing time (v5.21) to an unresponsive system (v5.22) that has now been unresponsive for > 15 minutes and still has not finished.

Judging from htop, it seems a "grep -Fv" (I do not remember the exact command) that runs while processing the blocklist is causing the problems...

Very glad you are looking into it, thanks.


That seems to be the culprit!

Raspberry Pi 3B, 1 GB RAM, 32 GB SD card, running unbound, redis, knot-resolver, grafana, webmin, chrony, mailutils (exim & dovecot).

uname -a: Linux raspberrypi 6.1.19-v7+ #1637 SMP Tue Mar 14 11:04:52 GMT 2023 armv7l GNU/Linux

free -mh (while gravity is processing lists)

total used free shared buff/cache available

Mem: 921Mi 183Mi 403Mi 2.0Mi 334Mi 678Mi

Swap: 255Mi 168Mi 87Mi

free -mh (when gravity is finished)

total used free shared buff/cache available

Mem: 921Mi 166Mi 156Mi 3.0Mi 599Mi 695Mi

Swap: 255Mi 164Mi 91Mi

60 lists, 3.051.262 entries, 2.368.767 unique entries, using two lists with ABP-style entries:

real 7m28.728s

user 4m52.872s

sys 0m32.030s

As you can see, I upped my swap (a long time ago) from the default 100 MB to 256 MB.

The duration of pihole -g hasn't changed, compared to earlier versions of pi-hole.

Please check for a stray temporary gravity db:

ls -lah /etc/pihole/gravity.db_temp

If that file exists, make absolutely sure you remove it before you try to update gravity again.

sudo rm /etc/pihole/gravity.db_temp

And could you please check your /tmp folder for potential left-overs?

sudo du -h /tmp/tmp.*

If any, you should probably remove them, but better share the output so we can confirm they belong to Pi-hole and not some other process.
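The two checks above can be combined into one report-only helper. This is just a sketch: it lists candidates and deletes nothing, so the output can be shared first. The function name `list_gravity_leftovers` is hypothetical, and the `tmp.*` pattern will also match temporary files from other programs.

```shell
# Lists a stray gravity.db_temp and any tmp.* entries; removes nothing.
# $1: Pi-hole config directory, $2: temp directory
list_gravity_leftovers() {
    if [ -e "$1/gravity.db_temp" ]; then
        echo "$1/gravity.db_temp"
    fi
    for f in "$2"/tmp.*; do
        # the glob stays unexpanded when nothing matches, so test for existence
        if [ -e "$f" ]; then
            echo "$f"
        fi
    done
    return 0
}

list_gravity_leftovers /etc/pihole /tmp
```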

As others already have mentioned:

The issue does not arise with relatively small block list(s), regardless of their number, but with processing big lists. So it probably is not the number of lists but the size of the list that causes the problems.

So, I guess there will be, dependent on the system's free memory available, a maximum size the new Pi-hole version can process without choking.
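To find out which of your lists fall into the "big" category, you can count the entries in a downloaded copy before adding it. A rough sketch only: it treats every line that is neither blank nor a comment (`#` for hosts-style lists, `!` for ABP-style lists) as one entry, which will not match Pi-hole's own counting exactly. The function name is made up.

```shell
# Prints the approximate number of entries in a blocklist file:
# every line that is not blank and does not start with # or ! is counted.
count_list_entries() {
    awk '!/^[[:space:]]*($|[#!])/ { n++ } END { print n + 0 }' "$1"
}

# Usage, with a hypothetical local copy of a list:
#   count_list_entries ./downloaded-list.txt
```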

Here’s the requested output on my Pi zero2:

pi@pi0-2:~$ ls -lah /etc/pihole/gravity.db_temp

-rw-r--r-- 1 root root 92K Mar 22 20:25 /etc/pihole/gravity.db_temp

pi@pi0-2:~$ sudo rm /etc/pihole/gravity.db_temp

pi@pi0-2:~$ sudo du -h /tmp/tmp.*

20M /tmp/tmp.YmNyBatjyH

8.6M /tmp/tmp.eDQ5iNVvJp.gravity

pi@pi0-2:~$

I’m guessing the gravity tmp files don’t need to be there, but I’ll wait for advice or instructions before deleting them.

Those look like Pi-hole files - it should be safe to remove them.

Indeed, the issue seems at least partially related to /tmp memory consumption, and as suspected, a large number of lists may add to this just as well as a single one of extreme size (with a combination of them possibly being the worst).

I'm experimenting with some countermeasures, but will also notify development about this.

We all have day jobs, so don't expect a fix within the hour, but know that we are at it.