@jpgpi250 I've compiled Unbound now, just one question... I have to create the /etc/unbound/unbound.conf.d/ directory myself, right? It isn't there after compiling and starting the service...

Yes. I used the directory structure of the Raspbian version of unbound, even though that version is very old. All the files come from that installation (I saved and reused them). The only file with a personal touch is /etc/unbound/unbound.conf.d/unbound.conf.
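A minimal sketch of recreating that layout for a source build, assuming bash. The helper name setup_dropin_dir is hypothetical; the include line follows the pattern the Debian/Raspbian package uses in its unbound.conf, and the config directory is passed in because a source build may use a different path depending on how it was configured.

```shell
#!/bin/bash
# Sketch: recreate the Debian/Raspbian-style drop-in layout for a self-compiled unbound.
# ASSUMPTION: $1 is the directory holding unbound.conf (this thread uses /etc/unbound).

setup_dropin_dir() {
    local confdir=$1
    local include_line="include: \"$confdir/unbound.conf.d/*.conf\""

    # a source install does not create the drop-in directory
    mkdir -p "$confdir/unbound.conf.d"
    touch "$confdir/unbound.conf"

    # add the include line only once, so running this twice is harmless
    if ! grep -qxF "$include_line" "$confdir/unbound.conf"; then
        printf '%s\n' "$include_line" >> "$confdir/unbound.conf"
    fi
}
```

Run setup_dropin_dir /etc/unbound as root, put your own settings in a file ending in .conf (such as /etc/unbound/unbound.conf.d/unbound.conf) so the include glob matches it, and check the result with unbound-checkconf before restarting unbound.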

Thanks, looks like it's working!

So far so good. This is the current version of /etc/knot-resolver/kresd.conf:

```lua
-- vim:syntax=lua:set ts=4 sw=4:
-- Refer to manual: http://knot-resolver.readthedocs.org/en/stable/daemon.html#configuration

-- Network interface configuration: see kresd.systemd(7)

-- For DNS-over-HTTPS and web management when using http module
-- modules.load('http')
-- http.config({
--     cert = '/etc/knot-resolver/mycert.crt',
--     key = '/etc/knot-resolver/mykey.key',
--     tls = true,
-- })

-- To disable DNSSEC validation, uncomment the following line (not recommended)
-- trust_anchors.remove('.')

-- Load useful modules
modules = {
    'hints > iterate', -- Load /etc/hosts and allow custom root hints
    'stats',           -- Track internal statistics
    'predict',         -- Prefetch expiring/frequent records
}

-- Cache size
cache.size = 100 * MB

modules.load('prefill')
prefill.config({
    ['.'] = {
        url = 'https://www.internic.net/domain/root.zone',
        ca_file = '/etc/ssl/certs/ca-certificates.crt',
        interval = 86400 -- seconds
    }
})

hints.root_file = '/usr/share/dns/root.hints'
hints.root({
    ['i.root-servers.net.'] = { '2001:7fe::53', '192.36.148.17' }
})

trust_anchors.add_file('/usr/share/dns/root.key')
```

The syslog entries at startup:

```text
May 11 12:42:09 raspberrypi systemd[1]: Starting Knot Resolver daemon...
May 11 12:42:09 raspberrypi kresd[16606]: [ ta ] warning: overriding previously set trust anchors for .
May 11 12:42:09 raspberrypi systemd[1]: Started Knot Resolver daemon.
May 11 12:42:09 raspberrypi kresd[16606]: [prefill] root zone file valid for 09 hours 40 minutes, reusing data from disk
May 11 12:42:10 raspberrypi kresd[16606]: [prefill] root zone successfully parsed, import started
May 11 12:42:10 raspberrypi kresd[16606]: [prefill] root zone refresh in 09 hours 40 minutes
May 11 12:42:10 raspberrypi kresd[16606]: [ta_update] refreshing TA for .
May 11 12:42:12 raspberrypi kresd[16606]: [ta_update] key: 20326 state: Valid
May 11 12:42:12 raspberrypi kresd[16606]: [ta_update] next refresh for . in 12 hours
```

I'm NOT sure what the warning means...

I'm not sure what it means either. Is it because of the prefill, or because you are auto-maintaining the trust anchors? I think the config looks good, though! You didn't get that weird name resolution error when prefilling either. Have you installed Pi-hole yet?

I'm changing the configuration while pihole is up and running. When knot-resolver is down, unbound does the name resolution.

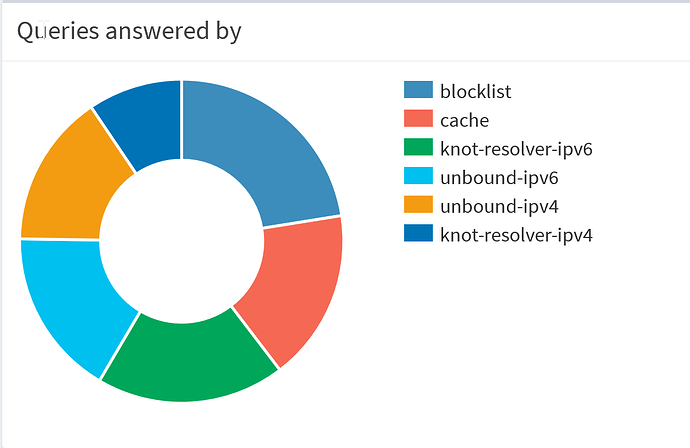

The stats now look like this (up and running for about 4 hours, 2250 queries, 500 blocked):

Nice! I'm going to copy this setup if you don't mind...

I didn't get very far today; I had family stuff to do. Maybe tomorrow...

I'm up and running with the dual setup now; I just have to switch the entire network over to the new Pi-hole.

Do you think maybe we should level the playing field and set this in kresd.conf?

```lua
cache.min_ttl(3600)
cache.max_ttl(86400)
```

I really don't think optimizing the cache on knot-resolver and unbound is beneficial. Unbound and knot-resolver (when running them both) have only one client: pihole-FTL.

pihole-FTL has its own cache; see the entry cache-size=10000 in /etc/dnsmasq.d/01-pihole.conf. Personally, I've changed this to cache-size=65535, giving pihole-FTL a bigger cache. I'm still not sure this is beneficial, because pihole-FTL says at startup: "cache size greater than 10000 may cause performance issues, and is unlikely to be useful".

All your devices send their DNS queries to pihole-FTL and thus use pihole-FTL's cache. pihole-FTL answers a query from its cache, OR from the blocklist, OR forwards it to the preferred resolver.

pihole-FTL chooses the preferred resolver by sending a query to all configured resolvers (the server= entries in the /etc/dnsmasq.d config files) every once in a while. The dnsmasq sources (/home/pi/dnsmasq/dnsmasq-2.80rc1/src/config.h) contain the following entries:

```c
#define FORWARD_TEST 50 /* try all servers every 50 queries */
#define FORWARD_TIME 20 /* or 20 seconds */
```

edit

I have both dnsmasq (disabled) and pihole-FTL (enabled and running) installed on my system. When pihole-FTL pisses me off, I disable pihole-FTL and enable dnsmasq to verify I get the same results. The values above are the dnsmasq values; I have no idea which values pihole-FTL uses.

/edit
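The two constants describe a simple rule: once 50 queries or 20 seconds have passed since the last test (whichever comes first), the next query goes to all servers. A sketch of that rule in bash, for illustration only: should_test_all_servers is a hypothetical name, dnsmasq's real logic (including the exact comparison it uses) lives in its C sources, and pihole-FTL may use different values.

```shell
#!/bin/bash
# Illustration of the FORWARD_TEST / FORWARD_TIME rule, not dnsmasq's actual code.

FORWARD_TEST=50   # queries between tests (value from config.h)
FORWARD_TIME=20   # seconds between tests

# $1 = queries forwarded since the last test, $2 = seconds since the last test
# Exit status 0 means: send the next query to all configured servers.
should_test_all_servers() {
    local queries=$1 elapsed=$2
    [ "$queries" -ge "$FORWARD_TEST" ] || [ "$elapsed" -ge "$FORWARD_TIME" ]
}
```

For example, should_test_all_servers 12 25 && echo "test all servers" prints the message, because 25 seconds already exceeds the 20-second limit even though only 12 queries were forwarded.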

The only time you would use the cache of knot-resolver or unbound would be when you enter commands (on your pihole) like:

```shell
# unbound (my IP and port configuration)
dig @127.10.10.2 -p 5552 +dnssec www.raspberrypi.org
dig @fdaa:bbcc:ddee:2::5552 -p 5552 +dnssec www.raspberrypi.org

# knot-resolver (my IP and port configuration)
dig @127.10.10.5 -p 5555 +dnssec www.raspberrypi.org
dig @fdaa:bbcc:ddee:2::5555 -p 5555 +dnssec www.raspberrypi.org
```

These are the commands I use to verify the resolvers (unbound and knot-resolver) are still alive and responsive.
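These checks can be wrapped in a small script that prints the DNS status of each resolver and fails if any of them does not answer NOERROR. A minimal sketch, assuming bash and dig; resolver_status and check_resolvers are hypothetical names, and the addresses and ports are the ones used in this thread.

```shell
#!/bin/bash
# Sketch: report the DNS status (NOERROR, SERVFAIL, ...) of each local resolver.

# Print the status of one query, or NOREPLY if the resolver did not answer.
resolver_status() {
    local server=$1 port=$2 name=$3 out status
    # +time/+tries keep a dead resolver from blocking the check for long
    out=$(dig @"$server" -p "$port" +dnssec +time=2 +tries=1 "$name" 2>/dev/null) || {
        echo "NOREPLY"
        return
    }
    # the header line looks like: ";; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: ..."
    status=$(printf '%s\n' "$out" | sed -n 's/.*status: \([A-Z]*\),.*/\1/p')
    echo "${status:-NOREPLY}"
}

# Usage: check_resolvers NAME SERVER PORT [SERVER PORT ...]
# Returns non-zero if any resolver is not healthy.
check_resolvers() {
    local name=$1; shift
    local rc=0 server port status
    while [ "$#" -ge 2 ]; do
        server=$1 port=$2; shift 2
        status=$(resolver_status "$server" "$port" "$name")
        echo "$server#$port: $status"
        [ "$status" = "NOERROR" ] || rc=1
    done
    return $rc
}
```

For example: check_resolvers www.raspberrypi.org 127.10.10.2 5552 fdaa:bbcc:ddee:2::5552 5552 127.10.10.5 5555 fdaa:bbcc:ddee:2::5555 5555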

But pihole-FTL's cache, like dnsmasq's, doesn't do any prefetching or prediction, right? It probably only caches an entry for the TTL set by the domain? The cache hit ratio on my Pi-hole isn't that great, and I feel like the Unbound/Knot cache helps a lot...

As you can see in the graph a few posts earlier, the cache on my pihole is used (red); it is currently at 19.2% (you can see the value when you hover over the graph in the admin dashboard).

With the configurations I listed earlier, knot-resolver and unbound will always have the root zone in their cache, so no problem there. That is also the reason why I didn't disable the cache altogether.

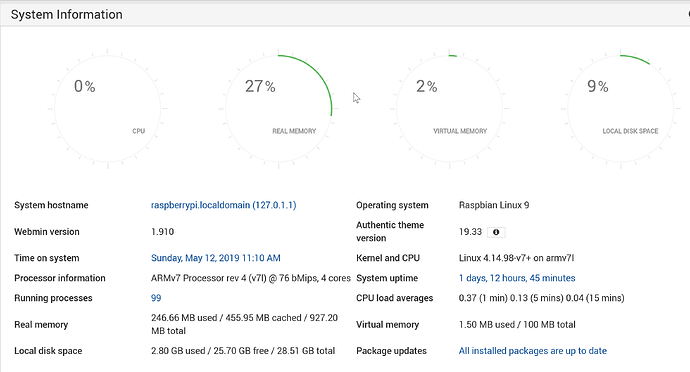

I assume optimizing the cache for both unbound and knot-resolver does no harm. You can use the settings you listed earlier. The only thing you have to watch out for is memory usage: in your config, you increased the cache to 200 MB. You need to ensure your Pi has enough memory to keep everything running without swapping. To verify this, I have installed webmin, which has a useful stats page.

The instructions to install webmin can be found in my Pi installation manual here, chapter 4, sections 11 and 12.

In the end, all that counts is the user experience. If you feel the resolver(s) aren't responsive enough, you should consider further optimizations. If it feels OK, only security settings would improve your setup, such as hiding the version and identity (I'm still looking for how to do that for both unbound and knot-resolver; not found yet...).
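To see what a resolver currently reveals, you can send it CHAOS-class TXT queries for version.bind and id.server. For unbound, the unbound.conf(5) manual documents the server options hide-version and hide-identity, which make unbound refuse these queries; the sketch makes no claim about how knot-resolver handles them. It assumes bash and dig, the function names are hypothetical, and an empty answer means either "hidden" or "unreachable".

```shell
#!/bin/bash
# Sketch: check whether a resolver answers version/identity queries.

chaos_txt() {
    local server=$1 port=$2 name=$3
    # drop dig's ";;" diagnostic lines (e.g. timeouts) so they don't count as answers
    dig @"$server" -p "$port" +short +time=2 +tries=1 CH TXT "$name" 2>/dev/null |
        grep -v '^;;'
}

report_identity() {
    local server=$1 port=$2 name answer
    for name in version.bind id.server; do
        answer=$(chaos_txt "$server" "$port" "$name")
        if [ -n "$answer" ]; then
            echo "$name: exposed ($answer)"
        else
            echo "$name: hidden"
        fi
    done
}
```

For example, report_identity 127.10.10.2 5552 checks the unbound instance from this thread, and report_identity 127.10.10.5 5555 the knot-resolver instance.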

The real problem: how are you going to measure whether your changed (cache) settings have a real impact? I have no answer to that...

I still think you will get a better experience letting Unbound/Knot do some heavy caching and prefetching of popular domains. It's just like using Cloudflare, for example: they cache, so your experience is better.

About memory use: I always thought I could trust the memory usage percentage on the Pi-hole dashboard, which is usually pretty low?

OK (I assume optimizing the cache for both unbound and knot-resolver does no harm).

My questions:

- You are changing the cache settings, what are the defaults (when nothing is specified)?

- What are the recommendations for specific environments (few users, large company, …)?

- How are you going to measure whether your changed (cache) settings have a real impact?

The information is limited (load, temperature, memory usage) and doesn't refresh frequently enough to notice short increases.

Answering my own question, after running a short test:

- Stop the resolver you want to test.

- Start the resolver again (its cache will now be empty).

- Run the following script:

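```shell
#!/bin/bash
# test IPv4 knot-resolver

SECONDS=0

# read one domain per line; quoting "$domain" avoids word splitting and globbing
while IFS= read -r domain; do
    domain=${domain%$'\r'}          # strip a trailing CR in case the list has Windows line endings
    [ -n "$domain" ] || continue    # skip empty lines
    dig @127.10.10.5 -p 5555 +dnssec "$domain"
done < /home/pi/domains.txt

duration=$SECONDS
echo "$((duration / 60)) minutes and $((duration % 60)) seconds elapsed."
```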

You can download the list (domains.txt) here. The zip file also contains a program to test DNS on Windows. Using that would NOT be a good test, as you would be using the pihole-FTL cache and possibly all available resolvers (unbound & knot-resolver).

By running the script on your Pi, you ensure all requests are processed by the same resolver. Remember, however, that this does NOT only test the performance of your resolver; it is also highly dependent on the response time of the DNS servers that answer the queries.

edit

Result of running the script without the modified cache parameters (knot-resolver restarted):

7 minutes and 48 seconds elapsed.

Result of running the script with the modified cache parameters (knot-resolver restarted with the new configuration):

7 minutes and 49 seconds elapsed.

Result of running the script with the modified cache parameters again (knot-resolver NOT restarted, so the cache is still populated):

7 minutes and 47 seconds elapsed.

My conclusion: Either my test method sucks OR the cache parameters you proposed don't have any effect.

/edit

edit2

OR the pi is the bottleneck and cannot process the script any faster than this.

The question thus remains: how are you going to measure whether your changed (cache) settings have a real impact?

/edit2
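If the Pi itself is the bottleneck, one way to take the loop overhead (starting dig, printing its output) out of the measurement is to add up the round-trip time dig reports on its ";; Query time: N msec" line. A minimal sketch, assuming bash and dig; measure_resolver is a hypothetical name. Running it right after a restart (empty cache) and again immediately afterwards (populated cache) gives a cold vs. warm comparison for that moment only.

```shell
#!/bin/bash
# Sketch: sum dig's own per-query timing instead of timing the whole loop.

# $1 = server, $2 = port, $3 = file with one domain per line
measure_resolver() {
    local server=$1 port=$2 list=$3
    local domain ms total=0 count=0
    while IFS= read -r domain; do
        domain=${domain%$'\r'}          # tolerate Windows line endings
        [ -n "$domain" ] || continue
        ms=$(dig @"$server" -p "$port" +dnssec +time=2 +tries=1 "$domain" 2>/dev/null < /dev/null |
             sed -n 's/^;; Query time: \([0-9]*\) msec.*/\1/p')
        [ -n "$ms" ] || continue        # no reply: skip instead of counting 0 ms
        total=$((total + ms))
        count=$((count + 1))
    done < "$list"
    if [ "$count" -eq 0 ]; then
        echo "no answers"
        return 1
    fi
    echo "$count queries, total ${total} ms, average $((total / count)) ms"
}
```

For example: measure_resolver 127.10.10.5 5555 /home/pi/domains.txt for knot-resolver, or measure_resolver 127.10.10.2 5552 /home/pi/domains.txt for unbound.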

edit3

I ran the same test on the unbound resolver, using the configuration mentioned earlier. I stopped and started unbound, clearing its cache, before running the test. Result:

7 minutes and 55 seconds elapsed.

/edit3

I don't think running those lists will show you much about the effectiveness of the cache. The first time it's run, nothing is in the cache anyway, and even if you run it a second time, it depends on the TTL of each cached entry. Knot, at least, caches and prefetches popular domain names depending on how often they are used, and gives them a higher TTL in the cache the more often they have been requested. At least that's how I understood it. It doesn't just cache an entry forever because it's been used once (although unbound is configured with a minimum TTL of 3600). I have noticed with Knot that it takes a few days to notice the difference. I don't care much about measuring exact numbers, as long as it works well and I'm happy. It will take time to fine-tune, and it's all about real-world results, not lists.

Edit: I think what I'm trying to say is that it's all about having the right domains cached at the right time, when they are needed. That is probably what makes the most difference for me, anyway.

Thanks, I already have prefetch on, but I will try adding serve-expired!
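For unbound, both options belong in the server: clause. A minimal sketch that writes them to their own drop-in file, assuming bash and that the unbound.conf.d directory is included from unbound.conf; write_cache_dropin and the file name cache.conf are hypothetical.

```shell
#!/bin/bash
# Sketch: put unbound's prefetch and serve-expired options in a separate drop-in file.
# ASSUMPTION: $1 is a directory included from unbound.conf (e.g. /etc/unbound/unbound.conf.d).

write_cache_dropin() {
    local dir=$1
    cat > "$dir/cache.conf" <<'EOF'
server:
    # refresh cached entries that are still being queried shortly before they expire
    prefetch: yes
    # answer from expired cache entries instead of waiting for a fresh lookup;
    # the fresh answer replaces the cache entry afterwards
    serve-expired: yes
EOF
}
```

Run write_cache_dropin /etc/unbound/unbound.conf.d as root, check the configuration with unbound-checkconf, then restart unbound. If prefetch is already set in your existing file, the serve-expired line is the only new setting.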

I think it might be similar to Knot's:

```lua
'serve_stale < cache',
```

...or maybe not. That might just be if the server is not responding...

Yes indeed, thanks for confirming what I was too lazy to look up. And thanks for the comparisons with Unbound, much appreciated!


A small cache is slightly faster, though it's unlikely that you will notice the difference without heavily stressing your Pi-hole. The main reason for this warning is to point out the extra memory requirements. Whilst this may not be an issue on modern SBCs with a gigabyte of RAM, there are also smaller boards out there, and with, e.g., 256 MB for the entire system, you may run into a bottleneck much sooner.

There is a cache detail display on the settings page that shows the cache size and the number of cache insertions and evictions. As long as evictions are zero (or at least close to zero), your cache is large enough (it could obviously be too large). Domains whose TTL runs out naturally make room for new queries, so most environments shouldn't need a cache larger than maybe 1000 entries.
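The same counters can also be read over DNS. The dnsmasq manual documents that, unless --no-ident is set, dnsmasq answers CHAOS-class TXT queries for cachesize.bind, insertions.bind, evictions.bind, hits.bind and misses.bind. pihole-FTL is built on dnsmasq, so this sketch assumes it answers them too; the function names and the FTL_SERVER variable are hypothetical, and the hit ratio is only a rough indicator.

```shell
#!/bin/bash
# Sketch: read dnsmasq-style cache statistics from pihole-FTL over DNS.
# ASSUMPTION: pihole-FTL answers CHAOS TXT *.bind queries like dnsmasq does.

ftl_stat() {
    # +short prints the TXT string with quotes, e.g. "10000"; strip them
    dig @"${FTL_SERVER:-127.0.0.1}" +short +time=2 +tries=1 CH TXT "$1.bind" 2>/dev/null |
        grep -v '^;;' | tr -d '"'
}

cache_report() {
    local size evictions hits misses
    size=$(ftl_stat cachesize)
    if [ -z "$size" ]; then
        echo "no cache statistics received"
        return 1
    fi
    evictions=$(ftl_stat evictions)
    hits=$(ftl_stat hits)
    misses=$(ftl_stat misses)
    echo "cache size: $size, evictions: ${evictions:-0}"
    if [ "${evictions:-0}" -eq 0 ]; then
        echo "no evictions: the cache is large enough"
    else
        echo "evictions > 0: the cache may be too small"
    fi
    if [ $((hits + misses)) -gt 0 ]; then
        echo "hit ratio: $((100 * hits / (hits + misses)))%"
    fi
}
```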

I cannot speak for knot-resolver, but at least in the case of unbound, the extra cache is meaningful. The reason is that pihole-FTL only caches a few record types, most notably A, AAAA, and CNAME records, and only for FQDNs. Unbound, however, traverses the recursive resolution path and stores data from the root down to the requested subdomain in its cache. It can later reuse parts of that knowledge (e.g., about the .com domain). As pihole-FTL doesn't do any recursive resolving, this effectively reduces the number of queries unbound has to make for new domains. And yes, prefetching may also save you some milliseconds here and there.

I don't think the cache is something that can easily be optimized; both resolvers are already pretty well tuned. Messing around with the TTL (especially forcing a minimum value) can actually cause malfunctions, e.g., if the DNS record in fact changes frequently.
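The risk of forcing a minimum TTL comes down to simple arithmetic: the resolver keeps a record for the TTL the domain owner published, clamped into the range set by cache-min-ttl/cache-max-ttl (unbound) or cache.min_ttl()/cache.max_ttl() (knot-resolver). A minimal sketch; effective_ttl is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: how long a record stays cached once a minimum and maximum TTL are enforced.

# $1 = TTL published by the domain owner, $2 = configured minimum, $3 = configured maximum
effective_ttl() {
    local ttl=$1 min=$2 max=$3
    if [ "$ttl" -lt "$min" ]; then ttl=$min; fi
    if [ "$ttl" -gt "$max" ]; then ttl=$max; fi
    echo "$ttl"
}
```

With the values proposed earlier in this thread, effective_ttl 60 3600 86400 prints 3600: a record published with a 60-second TTL would be kept for an hour, so a changed address could be served from the cache for up to 59 minutes longer than the owner intended.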

We use much less strict testing parameters, as sending queries to all servers at least every 20 seconds seems a bit aggressive and also conflicts with our goal of optimizing privacy (don't send everything to all enabled DNS servers). See here for the constants used by pihole-FTL:

Thank you for this clear explanation.

As you can see in the configurations published above, both knot-resolver and unbound have the necessary configuration options to store a copy of the root zone locally. I assume this implies that the information for the .com domain is only retrieved when the resolvers (unbound and knot-resolver) refresh that information.

Nice to know. I always changed the values in the dnsmasq source before compiling it, as the values cannot be changed at runtime. Any idea why Simon chose these aggressive values?

So then what's your opinion on the Unbound guide in the Pi-hole docs, which sets min and max TTL in the config? Should that be removed?