Versions

- Pi-hole: v5.3.1 (Latest: v5.3.1)

- AdminLTE: v5.5 (Latest: v5.5)

- FTL: v5.8 (Latest: v5.8)

Platform

- OS and version: Debian GNU/Linux 10 (buster)

- Platform: Raspberry Pi 4 (8 GB) with Docker

Expected behavior

The permitted domains should not be flooded with repeated hits for a single domain.

Actual behavior / bug

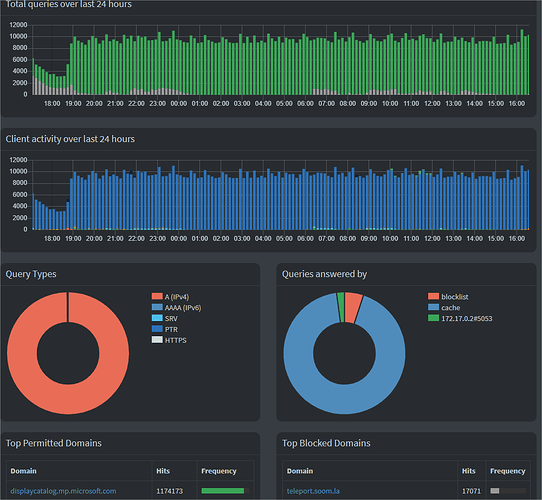

After installing the latest version of Pi-hole with Docker on my Raspberry Pi 4 (8 GB), I see strange behavior in the permitted domains.

I see multiple queries per second for the domain displaycatalog.mp.microsoft.com.

Initially, the query was made by my computer at 192.168.0.23 (I don't know why, since I hadn't opened the Windows Store), and after that it looks like a loop started.

Steps to reproduce

Steps to reproduce the behavior:

- Go to the Dashboard

- Scroll down to Top Permitted Domains

- See error

Screenshots

Additional context

Alongside Pi-hole, I run my own DNS-over-HTTPS Docker container, which is referenced in the Pi-hole configuration.
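To confirm which upstream servers Pi-hole actually forwards to (for example, that the DNS-over-HTTPS container is listed and that no entry points back at Pi-hole's own address, which could create a forwarding loop), the `PIHOLE_DNS_*` entries in `setupVars.conf` can be listed. This is a minimal sketch, assuming Pi-hole v5 keeps `setupVars.conf` in `/etc/pihole/`, which the script below maps to `/opt/piholesrv/pihole/pihole/` on the host; `list_upstreams` is a hypothetical helper name, not part of Pi-hole.

```shell
# Hypothetical helper: print the upstream DNS servers configured in a
# Pi-hole v5 setupVars.conf (lines of the form PIHOLE_DNS_1=1.1.1.1).
# Assumption: default host path taken from the -v mapping in pihole.sh.
list_upstreams() {
  local conf="${1:-/opt/piholesrv/pihole/pihole/setupVars.conf}"
  # Keep only PIHOLE_DNS_<n>= lines, then print everything after the first "="
  # so values with a port (e.g. address#5053) stay intact.
  grep -E '^PIHOLE_DNS_[0-9]+=' "$conf" | cut -d '=' -f 2-
}
```

Running `list_upstreams` on the host prints one upstream per line; each address can then be compared with the DoH container's address and with Pi-hole's own addresses.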

This is the script I launch with supervisorctl:

```shell
$ cat pihole.sh
#!/bin/bash
# https://github.com/pi-hole/docker-pi-hole/blob/master/README.md

# Forward SIGTERM to the backgrounded "docker run" client
_term() {
  echo "Caught SIGTERM signal!"
  kill -TERM "$child"
}

trap _term SIGTERM

# Remove any previous container and fetch the latest image
docker stop pihole || true
docker rm -f pihole || true
docker pull pihole/pihole:latest || true

# IPv4 address of eth0, passed to Pi-hole as ServerIP
eth0_ip=$(ip address show dev eth0 | grep -E -o "inet [0-9.]+" | grep -E -o "[0-9.]+")
echo "$eth0_ip"

docker run --rm \
  --name pihole \
  -e INTERFACE="eth0" \
  --hostname=piholesrv \
  -e TZ="Europe/Paris" \
  -e ServerIP="${eth0_ip}" \
  --shm-size=256m \
  -e WEBPASSWORD="Ch4ngeMeP1ease!" \
  -v "/opt/piholesrv/pihole/pihole/:/etc/pihole/" \
  -v "/opt/piholesrv/pihole/dnsmasq.d/:/etc/dnsmasq.d/" \
  -v "/opt/piholesrv/pihole/log/:/var/log/" \
  -p 127.0.0.1:8080:80 \
  -p 53:53/tcp \
  -p 53:53/udp \
  --ip 172.17.0.3 \
  --cap-add NET_ADMIN \
  --cap-add=SYS_NICE \
  --dns=127.0.0.1 --dns=1.1.1.1 \
  pihole/pihole:latest &

child=$!
wait "$child"

docker stop pihole
```

I use `-p 127.0.0.1:8080:80` because Caddy runs in front of Pi-hole as a reverse proxy.
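The `eth0_ip` line in the script prints every IPv4 address on eth0, one per line, so if the interface ever carries a secondary address, `ServerIP` would receive a multi-line value. A minimal defensive sketch, assuming only the first address is wanted; `first_ipv4` is a hypothetical helper name, not part of Pi-hole or docker-pi-hole.

```shell
# Hypothetical helper: read `ip address show` output on stdin and print only
# the first IPv4 address, without its prefix length.
# "inet " (with a trailing space) deliberately does not match "inet6" lines.
first_ipv4() {
  grep -E -o 'inet [0-9.]+' | head -n 1 | cut -d ' ' -f 2
}

# Possible use in pihole.sh (assumption: the first address is the one
# clients should use):
# eth0_ip=$(ip address show dev eth0 | first_ipv4)
```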

In addition, the Pi-hole log shows these entries many times:

```
Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog.mp.microsoft.com is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-rp.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-rp-europe.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached consumerrp-displaycatalog-aks2eap-europe.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-europeeap.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached db5eap.displaycatalog.md.mp.microsoft.com.akadns.net is 52.155.217.156
Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog.mp.microsoft.com is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-rp.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-rp-europe.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached consumerrp-displaycatalog-aks2eap-europe.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-europeeap.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached db5eap.displaycatalog.md.mp.microsoft.com.akadns.net is 52.155.217.156
Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog.mp.microsoft.com is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-rp.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-rp-europe.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached consumerrp-displaycatalog-aks2eap-europe.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog-europeeap.md.mp.microsoft.com.akadns.net is <CNAME>
Apr 18 14:00:00 dnsmasq[513]: cached db5eap.displaycatalog.md.mp.microsoft.com.akadns.net is 52.155.217.156
Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog.mp.microsoft.com is (null)
Apr 18 14:00:00 dnsmasq[513]: config error is REFUSED
Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog.mp.microsoft.com is (null)
Apr 18 14:00:00 dnsmasq[513]: config error is REFUSED
Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog.mp.microsoft.com is (null)
Apr 18 14:00:00 dnsmasq[513]: config error is REFUSED
Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
Apr 18 14:00:00 dnsmasq[513]: cached displaycatalog.mp.microsoft.com is (null)
Apr 18 14:00:00 dnsmasq[513]: config error is REFUSED
```
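To put a number on "multiple queries per second", the repeated entries can be counted per second and per client, along with how many answers ended as REFUSED. This is a minimal sketch, assuming the default Pi-hole v5 log file `/var/log/pihole.log`, which the script's `/var/log/` volume places at `/opt/piholesrv/pihole/log/pihole.log` on the host; `queries_per_second` and `refused_count` are hypothetical helper names.

```shell
# Hypothetical helper: count "query[...] <domain>" lines per second and per
# client in a dnsmasq/Pi-hole log. For a line such as
#   Apr 18 14:00:00 dnsmasq[513]: query[A] displaycatalog.mp.microsoft.com from 172.17.0.1
# awk sees: $1-$3 = timestamp, $5 = query[TYPE], $6 = domain, $8 = client.
queries_per_second() {
  local domain="$1" log="$2"
  awk -v d="$domain" '$5 ~ /^query\[/ && $6 == d { n[$1 " " $2 " " $3 " " $8]++ }
    END { for (k in n) print n[k], k }' "$log" | sort -k1,1nr
}

# Hypothetical helper: count answers logged as "... is REFUSED".
refused_count() {
  grep -c 'is REFUSED$' "$1"
}
```

For example, `queries_per_second displaycatalog.mp.microsoft.com /opt/piholesrv/pihole/log/pihole.log | head` lists the busiest seconds first, each line as `<count> <timestamp> <client>`.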

Something seems to be wrong, but I can't tell where.

What am I doing wrong?

Thank you very much for your help.

Best regards,