Has anyone using Pi-hole successfully configured load balancing with HAProxy?

I have HAProxy and Pi-hole running on the same node, and another Pi-hole on a different node.

Please share.

Is there a compelling reason to put two DNS servers behind an HA proxy when you can already specify multiple DNS server IPs in just about every DNS client in use these days?

How would you specify multiple DNS server IPs to a VPN?

What is the layout of your scenario?

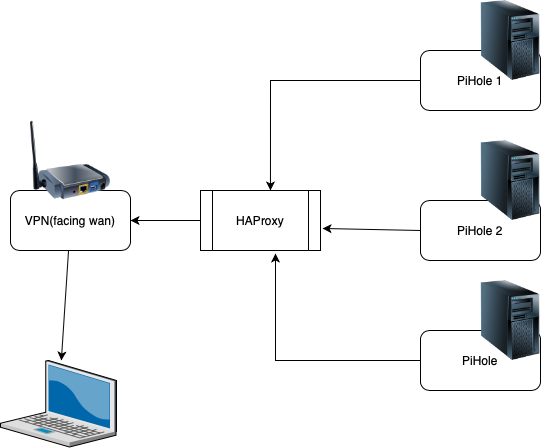

This is what I can think of:

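```
# Note: as far as I know, community HAProxy balances TCP, not UDP. Most DNS
# queries travel over UDP, so only DNS-over-TCP would pass through this listener.
global
    maxconn 4096

defaults
    # DNS is not HTTP; "mode http" cannot parse DNS traffic, so use plain TCP
    mode tcp
    timeout connect 5s
    timeout client 50s
    timeout server 50s

listen dns-in
    bind *:53
    balance roundrobin
    server dns1 192.168.0.15:5335 check
    server dns2 192.168.0.22:5335 check
    server dns3 192.168.0.23:5335 check
```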

What is driving a need for load balancing? A single Pi-hole can handle all of your network DNS traffic. Let the clients decide which of the three to use. If they all settle on one Pi-hole, that's not a problem.

That HAProxy node doesn't look redundant at all from the drawing.

What's the use of three DNS servers when that single HAProxy can go down, resulting in no DNS at all?

Most VPN servers allow you to push multiple DNS servers to the clients via DHCP.

That allows the clients to choose which DNS server to pick.

Most clients have some kind of logic that lets them choose, such as picking whichever server responds quickest.

With that last one, if one DNS server gets all the DNS queries and thus more load, the others will start responding quicker because they have less load.

And the clients will hop again to the quicker ones.

That way you already get some sort of load balancing if the nodes are identical.


Commonly, deploying multiple Pi-holes would aim for redundancy, not load balancing.

You'd only introduce a load balancer if you'd expect that a server would not be able to cope with the number of observed or expected requests.

For the DNS part, even an RPi Zero is able to handle several dozen clients with tens of thousands of requests a day without breaking a sweat (pihole-FTL has taken good care of that).
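To put "tens of thousands of requests a day" into numbers: even 100,000 queries a day average out to only about 1.2 queries per second. A quick Python back-of-the-envelope calculation; the 10x peak factor is a hypothetical assumption, not a measured figure.

```python
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400

# Hypothetical: assume the busiest minutes run at 10x the daily average.
PEAK_FACTOR = 10


def average_qps(queries_per_day: int) -> float:
    """Average DNS queries per second for a given daily query count."""
    return queries_per_day / SECONDS_PER_DAY


if __name__ == "__main__":
    for per_day in (10_000, 50_000, 100_000):
        avg = average_qps(per_day)
        print(f"{per_day:>7,} queries/day: {avg:.2f}/s on average, "
              f"~{avg * PEAK_FACTOR:.1f}/s at a {PEAK_FACTOR}x peak")
```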

But in the event of a failure, you would lose DNS altogether, so I'd understand the desire for a redundant server.

However, as others pointed out already, implementing redundancy by introducing another single point of failure would gain you nothing but a more complicated setup (and maybe a slightly higher power bill).
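To illustrate why, here is the usual back-of-the-envelope availability arithmetic in Python, assuming independent failures and a purely hypothetical 99% availability for every machine. Components in series (client, then HAProxy, then the Pi-holes) multiply their availabilities; redundant components in parallel only fail when all of them fail. Two Pi-holes are used instead of three to keep it short.

```python
from math import prod


def series(*availabilities: float) -> float:
    """All components in the chain must be up."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """At least one redundant component must be up (independent failures)."""
    return 1.0 - prod(1.0 - a for a in availabilities)


A = 0.99  # hypothetical availability of any single machine

single_pihole = A
two_piholes = parallel(A, A)                 # clients are given both IPs
behind_haproxy = series(A, parallel(A, A))   # one HAProxy in front of both

if __name__ == "__main__":
    print(f"single Pi-hole:         {single_pihole:.4%}")
    print(f"two Pi-holes:           {two_piholes:.4%}")
    print(f"two behind one HAProxy: {behind_haproxy:.4%}")
```

In this model the HAProxy variant even ends up slightly below a single Pi-hole, because the proxy is one more box that has to be up. It ignores client-side failover delays, which is the price of handing out two IPs.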

Most VPNs should allow for configuring more than one DNS server, and deHakkelaar has already explained how DNS itself may alleviate the need for additional redundancy measures.

Still, there might be scenarios where you are not able to distribute two DNS servers.

FritzBox routers, for example, only allow a single IP to be distributed as local DNS server via DHCP.

If you are facing such a situation, you may want to take a look at solutions using the Common Address Redundancy Protocol (CARP) or the Virtual Router Redundancy Protocol (VRRP).

ucarp and keepalived are among the better-known implementations. They would have to be installed on both Pi-hole machines. Package availability depends on your OS (AFAIK, Debian has packages for both).

Personally, I'd currently prefer CARP over VRRP, but I'd only recommend either for Pi-holes running alone on their machines:

If you run additional software next to Pi-hole on the same machine, you'd have to evaluate if that software and the clients using it would behave as expected when IPs are switched.

Both protocols do little more than switching an IP from one machine to another, so any software relying on some kind of state (session or not) will fail in that case, unless the respective software itself is prepared to take all necessary steps to run in such a scenario.
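Stripped of all protocol details (advertisement intervals, timers, preemption, authentication), what ucarp or keepalived try to maintain boils down to "the highest-priority node that is still alive holds the shared IP". A minimal Python model of just that rule; node names and priorities are hypothetical. Note what it does not do: nothing but the IP moves, which is exactly why stateful software on the same machine may get into trouble.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    priority: int        # higher priority wins the virtual IP
    alive: bool = True   # stands in for "its advertisements are still received"


def vip_holder(nodes: list[Node]) -> Optional[str]:
    """Name of the node that should own the virtual IP, or None if all are down."""
    alive = [n for n in nodes if n.alive]
    if not alive:
        return None
    return max(alive, key=lambda n: n.priority).name


if __name__ == "__main__":
    primary = Node("pihole1", priority=150)
    backup = Node("pihole2", priority=100)
    print(vip_holder([primary, backup]))   # pihole1
    primary.alive = False                  # primary fails, IP moves over
    print(vip_holder([primary, backup]))   # pihole2
```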

Three nodes are just enough for a proper active/passive cluster with voting, quorum, fencing, network bonding etc. to play ball with Pi-hole's services, cron jobs and databases stored on a cluster FS:

With Raspberry Pis, you'd need some sort of controlled UPS for fencing one another (STONITH) to prevent "split-brain".

EDIT: Maybe a rack-controlled PDU will do the job of fencing Raspis.

Clustering is not what I would recommend for simple Pi-hole DNS redundancy.

See this post for some insights into the difference between network-oriented fail-over software like ucarp and the cluster-aware approach followed by software like pacemaker or heartbeat.

Especially this:

The difference is very visible in case of a dirty failure such as a split brain. A cluster-based product may very well end up with none of the nodes offering the service, to ensure that the shared resource is never corrupted by concurrent accesses. A network-oriented product may end up with the IP present on both nodes, resulting in the service being available on both of them.

The first is most likely not what you want for Pi-hole's DNS.
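The quoted difference can be made concrete with a two-node toy model of a split brain, in which each node can no longer see its peer. All assumptions are hypothetical: the network-oriented node claims the IP when it sees no higher-priority peer, and the cluster-based node only keeps quorum (and may run the service) when its side of the partition holds a strict majority of votes.

```python
def network_oriented(visible: dict[str, set[str]],
                     priority: dict[str, int]) -> set[str]:
    """Nodes that claim the virtual IP: those seeing no higher-priority peer."""
    return {node for node, peers in visible.items()
            if all(priority[p] < priority[node] for p in peers)}


def cluster_based(visible: dict[str, set[str]]) -> set[str]:
    """Nodes that keep quorum (an active/passive cluster still runs the
    service on only one of them); one vote per node, no quorum disk."""
    total_votes = len(visible)
    return {node for node, peers in visible.items()
            if 2 * (len(peers) + 1) > total_votes}


priority = {"pihole1": 150, "pihole2": 100}
healthy = {"pihole1": {"pihole2"}, "pihole2": {"pihole1"}}
split = {"pihole1": set(), "pihole2": set()}   # the link between them is gone

if __name__ == "__main__":
    print(network_oriented(healthy, priority), network_oriented(split, priority))
    print(cluster_based(healthy), cluster_based(split))
```

In the split case the network-oriented model ends up with both nodes holding the IP, while the cluster-based one ends up with no node allowed to serve. For DNS the first is arguably the lesser evil, since any answer will do.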

That was just for fun.

But the above bit is what I want, so what's wrong with that?

If you have the active node (the one actually running pihole-FTL) writing to the shared database resource, you don't want the other nodes writing to the same shared files, so you deny them that resource / take it away from them.

Having every node vote, plus a fourth vote from the quorum partition, will guarantee, up to a point, that your services stay up very robustly.
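To see what the quorum partition's extra vote buys, here is the vote arithmetic for three nodes plus one quorum-partition vote in Python, assuming one vote each and a strict-majority rule (real cluster stacks let you weight these votes differently). Node names are hypothetical.

```python
from itertools import combinations

NODES = ("node1", "node2", "node3")   # hypothetical node names
QDISK_VOTES = 1                        # the "fourth vote" from the quorum partition
TOTAL_VOTES = len(NODES) + QDISK_VOTES


def quorate(members: set[str], sees_qdisk: bool) -> bool:
    """True if this group of nodes holds a strict majority of all votes."""
    votes = len(members) + (QDISK_VOTES if sees_qdisk else 0)
    return 2 * votes > TOTAL_VOTES


if __name__ == "__main__":
    for size in range(1, len(NODES) + 1):
        for group in combinations(NODES, size):
            for sees_qdisk in (False, True):
                label = " + quorum partition" if sees_qdisk else ""
                ok = quorate(set(group), sees_qdisk)
                print(f"{', '.join(group)}{label}: quorate={ok}")
```

With four votes a partition needs three of them: two nodes that can still reach the quorum partition keep running, while two nodes without it, or a single node with it, do not.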

That fencing bit is very effective if you have proper system management like IBM's RSA or HP's iLO.

I once set up a 4-node cluster with all the bells and whistles of bonding, fencing, quorum partition, cluster FS on SAN etc.

Within a week I received notifications that something was going wrong, but all services were still up.

It turned out a colleague of mine had pulled 7 CAT5 cables from my setup, not realizing it was already live... but the system still held up.

If some catastrophic event occurs, you're in trouble most of the time anyway, regardless of what solution you apply... Murphy's law.

EDIT: Oh, I forgot: with proper cluster heuristics, you can monitor QoS and intervene automatically if necessary.

I don't think it's a question of wrong or right, but rather one of preferences (and maybe simplicity).

Granted, Pi-hole as a whole is a bit of a mixed use-case.

The DNS part doesn't need clustering, as its simple query/response model is already atomic, and each DNS query is entirely independent of any previous one.

A simple fail-over of IPs is enough to keep you afloat. The most you will lose is either an answer not received or a query not reaching its target. Both events will be completely mitigated by a client resending a query.
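The client-side half of that argument, sketched in Python: a lost query or a lost answer just looks like a timeout, and the client asks again, possibly another server. `send` is a hypothetical stand-in for the actual UDP exchange (a real stub resolver also checks transaction IDs, handles truncation and so on); the IPs are the ones from the HAProxy config earlier in the thread.

```python
from typing import Callable, Optional, Sequence


def query_with_retry(query: bytes,
                     servers: Sequence[str],
                     send: Callable[[str, bytes], bytes],
                     attempts_per_server: int = 2) -> bytes:
    """Send `query` to each server in turn until one answers.

    Plain DNS queries are independent of each other, so retrying after a
    timeout is safe: it makes no difference whether the query or the answer
    got lost.
    """
    last_error: Optional[Exception] = None
    for server in servers:
        for _ in range(attempts_per_server):
            try:
                return send(server, query)
            except TimeoutError as exc:   # query or answer lost in transit
                last_error = exc
    raise TimeoutError("no DNS server answered") from last_error


if __name__ == "__main__":
    def flaky_send(server: str, query: bytes) -> bytes:
        # Hypothetical: pretend the first Pi-hole just went down mid-failover.
        if server == "192.168.0.15":
            raise TimeoutError(server)
        return b"answer from " + server.encode()

    print(query_with_retry(b"query", ["192.168.0.15", "192.168.0.22"], flaky_send))
```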

Similar is true for DHCP operations, but keeping lease information needs special attention.

Pi-hole's UI and its interaction with the query database are another story, and if you are using client-based filtering, this will also raise the bar.

Pi-hole itself isn't cluster-aware, meaning you have to rely on separate measures to keep your databases in sync (if that's what you want).

If you would choose to share the dbs among nodes instead, my proposal of using CARP or VRRP and just having the IP switch will corrupt your db sooner rather than later, so you have a valid use case for clustering the dbs here. But that would already require changes to how Pi-hole is accessing dbs to start with.

On the other hand, Pi-hole's query database is still fully operational when run separately on each node. Of course, that comes with the drawback that each db will only contain the queries that its node has processed.

Pi-hole's gravity configuration database will be updated uniformly as far as the blocklists are concerned, but other aspects like group management, client-based filtering and blocklist entries have to be propagated to other nodes. Fortunately, frequency of change can be expected to be low, and triggers are well-known.
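If you go the "separate databases, propagate changes" route, one caveat: don't just copy a SQLite file that may be mid-write. A minimal Python sketch using SQLite's online backup API (exposed in the standard library as `sqlite3.Connection.backup`), which takes a consistent snapshot. Shipping the copy to the other node and having its pihole-FTL pick up the change are deliberately left out, and the function names are hypothetical.

```python
import sqlite3


def copy_database(src: sqlite3.Connection, dst: sqlite3.Connection) -> None:
    """Overwrite `dst` with a consistent snapshot of `src` (SQLite backup API)."""
    src.backup(dst)


def snapshot_file(src_path: str, dst_path: str) -> None:
    """Snapshot one database file into another, e.g. before sending it to a peer."""
    src = sqlite3.connect(src_path)
    dst = sqlite3.connect(dst_path)
    try:
        copy_database(src, dst)
    finally:
        # sqlite3's context manager only commits, so close explicitly
        dst.close()
        src.close()
```

On the primary node, usage would be something like `snapshot_file("/etc/pihole/gravity.db", "/tmp/gravity.db.sync")`, where `/etc/pihole/gravity.db` is the usual location of the gravity database and the target path is hypothetical.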

I guess it'd depend on your actual need and your willingness to deal with the intricacies of your aspired solution.

If you focus on the DNS service (which I presume is the main concern for redundancy or availability respectively), ucarp or keepalived would be enough.

If you'd favour having only one database to configure and analyse queries against, then clustering may well be your thing.

If you're really keen, you'd try to separate those concerns and try to implement both.

For a start, my recommendation would be to stick with the DNS focus and go see how far that takes you.

Actually, I'd only recommend that in case you can't supply two DNS servers to your clients, and you are extremely uncomfortable with that single point of failure.

To put that into perspective, you may want to keep in mind that a router is a single point of failure in any home network anyway. That router normally takes care of DNS also.

Considering redundancy for DNS in a home network is a luxury affordable only due to low prices for devices like RPi Zeroes.

It's just a matter of how far you are willing to go to provide redundancy.

Some that I can think of:

At least two main interfaces to allow bonding;

Some other interfaces for heartbeat etc;

Two switches;

Two routers;

Two independent routes to upstream Internet;

Two power supplies on different groups;

Two UPSes;

A "no-break" power solution connected to at least two different power grids;

Redundant local storage (RAID etc);

Two HBAs for accessing shared storage;

At least two SAN/NAS solutions for distributed shared storage;

ECC memory striped;

Some solution for fencing either via server system management, controlled UPSes or intelligent PDUs;

And I'm probably forgetting a few.

And I say "two" for most of the items above but for some, you need to provision threefold for unforeseen circumstances.

But from a learning point of view, the services that Pi-hole provides are not that difficult to cluster.

It's a nice project if you want to get into clustering etc.

That Red Hat "High Availability" link I posted previously regards a VIP that hops between nodes as just another resource that can be configured in a cluster.

EDIT: Oh, I forgot to mention that most hypervisor solutions also provide a fencing device for your cluster solution.