Whenever gravity is run (manually with `pihole -g`, or automatically by the Sunday run), the new gravity database is built from the blocklists and eventually swapped in to become the active one. This method was found to be the most efficient one during the v5.0 beta tests.

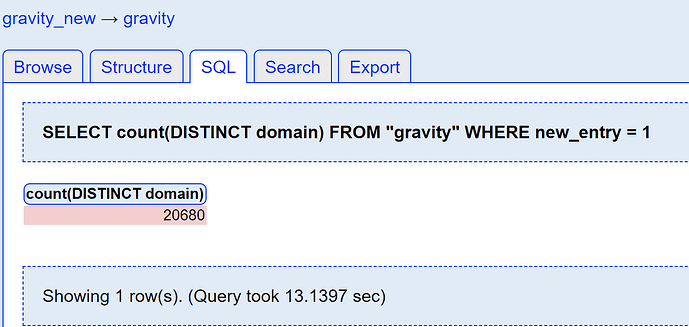

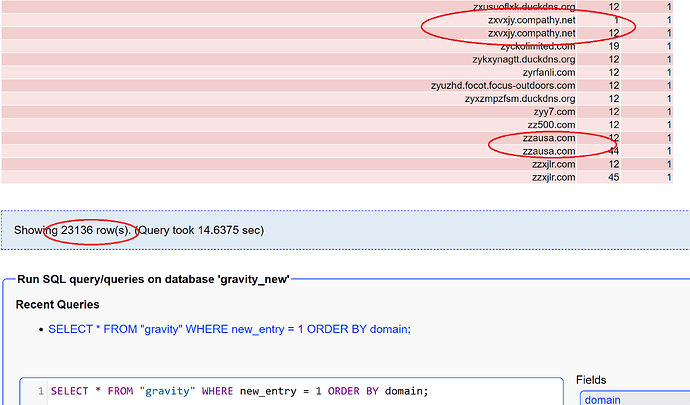

My request: don't simply erase the old database. Keep it on disk, so users can determine which domains have been added or removed since the last run. It often happens that something suddenly stops working on a Sunday (due to domains added from a blocklist). It is possible to find the cause using the query log, but it is impossible to determine whether the domain is new (recently added) or already existed (last week), unless the user starts checking all the pihole logs (some of them compressed).

By being able to determine that the blocked domain was added recently (in the last gravity run), the user can gain assurance and confidence that whitelisting the domain is the right thing to do.
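To illustrate the kind of check I have in mind, here is a minimal Python sketch that compares two gravity databases. It assumes both files are SQLite databases with a `gravity` table that has a `domain` column; the function names and the file paths in the usage note are hypothetical, not existing pihole code.

```python
import sqlite3
from contextlib import closing


def load_domains(db_path):
    """Return the set of domains stored in one gravity database."""
    # Assumption: blocked domains live in a table `gravity` with a `domain` column.
    with closing(sqlite3.connect(db_path)) as conn:
        rows = conn.execute("SELECT DISTINCT domain FROM gravity").fetchall()
    return {row[0] for row in rows}


def diff_gravity(old_db, new_db):
    """Return (added, removed) domains between the old and the new database."""
    old = load_domains(old_db)
    new = load_domains(new_db)
    return sorted(new - old), sorted(old - new)


def was_recently_added(domain, old_db, new_db):
    """True if the domain is in the new database but was not in the old one."""
    return domain in load_domains(new_db) and domain not in load_domains(old_db)
```

With the old database kept on disk, a call such as `was_recently_added("some.domain", "gravity_old.db", "gravity.db")` (both file names are assumptions) would answer the question directly, without digging through the logs.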

There shouldn't be a disk space problem: the old (active) and new (to be activated) databases both already exist on disk until 'swapping databases' is executed. Instead of deleting the old one, simply rename it, and erase it at the start of the next gravity run.

This hardly changes anything in the inner workings of pihole; only the moment at which the old database is removed changes.
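The proposed order of operations could be sketched roughly as follows. This is only an illustration in Python, not how the gravity script is actually written; the function names and the three paths passed in are hypothetical.

```python
import os
from pathlib import Path


def start_gravity_run(old_db: Path) -> None:
    """Erase the backup kept from the previous run, if there is one."""
    # Requires Python 3.8+ for missing_ok.
    old_db.unlink(missing_ok=True)


def swap_databases(active_db: Path, new_db: Path, old_db: Path) -> None:
    """Keep the previously active database as a backup, then activate the new one."""
    if active_db.exists():
        # Rename instead of delete: the old database stays on disk.
        os.replace(active_db, old_db)
    os.replace(new_db, active_db)
```

Because the backup is erased before the new database is built, at most two databases exist on disk at any time, the same as today.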

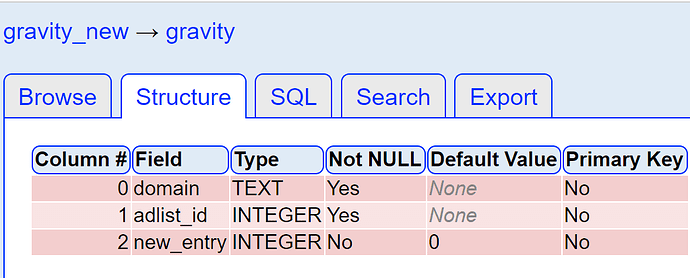

Eventually, and entirely optionally, an additional web interface page could be added to show the domains added or removed compared to the last gravity run.