Symptoms

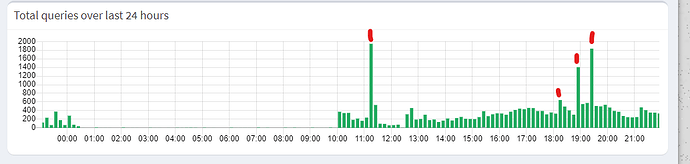

I'm experiencing unusual behaviour on a new installation of Pihole. Chrome on my Windows workstation occasionally stops working because of DNS failures. The failures are immediate rather than timeouts, and using nslookup I can confirm that Pihole is returning a "Refused" response.

Running dig on the pihole box against its own dnsmasq instance gives the same result. However, using dig to query pihole's upstream servers (Cloudflare, 1.1.1.1) directly works just fine, so this isn't an internet connection dropout.

I've tried capturing debug info, but I wasn't able to catch it in the middle of the latest failure. When it happens, DNS resolution is down for roughly 2-5 minutes, then comes back for no apparent reason.
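To catch the next failure while it is happening (so a debug token can be generated mid-outage), a small poller could run on the workstation and log every answer that isn't NOERROR. This is a minimal sketch using only the Python standard library: it hand-builds a DNS A query and reads the 4-bit RCODE from the reply header (REFUSED is 5 per RFC 1035). The helper names `probe` and `watch`, the default query name, and the 5-second interval are assumptions for illustration, not anything Pi-hole provides.

```python
import random
import socket
import struct
import time
from datetime import datetime

# Response codes from RFC 1035, section 4.1.1.
RCODE_NAMES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL",
               3: "NXDOMAIN", 4: "NOTIMP", 5: "REFUSED"}


def build_query(name: str, qid: int, qtype: int = 1) -> bytes:
    """Build a minimal DNS query: one question, QCLASS IN, recursion desired."""
    # Header: ID, flags (0x0100 = RD bit), QDCOUNT=1, ANCOUNT/NSCOUNT/ARCOUNT=0.
    header = struct.pack(">HHHHHH", qid, 0x0100, 1, 0, 0, 0)
    # QNAME is a sequence of length-prefixed labels ending in a zero byte.
    qname = b"".join(bytes([len(label)]) + label.encode("ascii")
                     for label in name.rstrip(".").split(".")) + b"\x00"
    return header + qname + struct.pack(">HH", qtype, 1)  # QTYPE (1 = A), QCLASS IN


def parse_rcode(response: bytes, qid: int) -> int:
    """Return the 4-bit RCODE from a response header after checking its ID."""
    if len(response) < 12:
        raise ValueError("response is shorter than a DNS header")
    rid, flags = struct.unpack(">HH", response[:4])
    if rid != qid:
        raise ValueError("transaction ID mismatch")
    return flags & 0x000F


def probe(server: str, name: str, timeout: float = 2.0) -> str:
    """Send one UDP query to server:53 and return the RCODE name or TIMEOUT."""
    # getaddrinfo picks AF_INET or AF_INET6, so IPv6 upstreams work too.
    family, _, _, _, addr = socket.getaddrinfo(server, 53, type=socket.SOCK_DGRAM)[0]
    qid = random.randint(0, 0xFFFF)
    with socket.socket(family, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name, qid), addr)
        try:
            data, _ = sock.recvfrom(4096)
        except socket.timeout:
            return "TIMEOUT"
    rcode = parse_rcode(data, qid)
    return RCODE_NAMES.get(rcode, f"RCODE{rcode}")


def watch(server: str, name: str = "mail.google.com", interval: float = 5.0) -> None:
    """Poll forever, printing a timestamped line for every non-NOERROR result."""
    while True:
        status = probe(server, name)
        if status != "NOERROR":
            stamp = datetime.now().isoformat(timespec="seconds")
            print(f"{stamp} {server} {name} {status}")
        time.sleep(interval)
```

For example, running `watch()` against the new pihole's address in one terminal and against `1.1.1.1` in another would show whether the upstream was answering at the same moment the pihole started returning REFUSED.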

Debug Token:

https://tricorder.pi-hole.net/w66RXrSS/

Config details

I built this pihole box to replace an existing proof-of-concept install on my network. The config should be quite similar: I copied it from the old box using Teleporter, checking all the checkboxes during import.

It's somewhat messy, but my network has three DHCP servers running on the same segment with non-overlapping IP pools: the Unifi USG3 gateway, the old pihole box, and the new pihole box. This setup has let me test pihole filtering for devices on the network without making it a single point of failure.

I would eventually like to make this new pihole the sole DHCP server and DNS resolver on the network, and later add a second, similarly configured pihole for high availability.

The old pihole install is an RPi 4 running Raspbian; the new one is a Radxa RockPi S running Ubuntu 20.04 with Radxa's custom 4.4 kernel for the hardware. Both are connected via their onboard Ethernet, and I have no reason to doubt their reliability.

My internet connection has native IPv4 and IPv6. My ISP delegates a prefix to the router, and clients autoconfigure their addresses from it. Accordingly, I've enabled both IPv4 and IPv6 upstream resolvers in Pihole.

Evidence from logs

With DEBUG_QUERIES=true set, I can see the following in pihole-FTL.log:

```
[2022-01-12 17:21:40.241 111183M] **** new UDP IPv4 query[A] query "mail.google.com" from eth0/192.168.1.70#37148 (ID 6726, FTL 29316, src/dnsmasq/forward.c:1601)
[2022-01-12 17:21:40.241 111183M] mail.google.com is known as not to be blocked
[2022-01-12 17:21:40.242 111183M] **** got cache reply: error is REFUSED (nowhere to forward to) (ID 6726, src/dnsmasq/rfc1035.c:1110)
[2022-01-12 17:21:40.243 111183M] EDE: network error (23)
[2022-01-12 17:21:40.243 111183M] Set reply to REFUSED (8) in src/dnsmasq_interface.c:2071
```

All the failures follow this same format. The corresponding entries in pihole.log are:

```
Jan 12 17:21:40 dnsmasq[111183]: query[A] mail.google.com from 192.168.1.70
Jan 12 17:21:40 dnsmasq[111183]: config error is REFUSED (EDE: network error)
```
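Since pihole.log records every REFUSED reply, the length of each outage can be measured from the log rather than estimated. The following is a minimal Python sketch, assuming the `config error is REFUSED` line format shown above: it groups REFUSED lines into one window whenever consecutive ones are no more than a chosen gap apart (60 seconds here, an arbitrary choice). The syslog-style timestamps carry no year, so the caller supplies it, and windows that span a year boundary are not handled. `refused_windows` is a hypothetical helper name.

```python
import re
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

# Matches lines such as:
# "Jan 12 17:21:40 dnsmasq[111183]: config error is REFUSED (EDE: network error)"
REFUSED_RE = re.compile(
    r"^(\w{3} +\d+ \d{2}:\d{2}:\d{2}) dnsmasq\[\d+\]: config error is REFUSED")

Window = Tuple[datetime, datetime, int]  # (first REFUSED, last REFUSED, count)


def refused_windows(lines: Iterable[str], year: int,
                    max_gap: timedelta = timedelta(seconds=60)) -> List[Window]:
    """Group REFUSED log lines into outage windows separated by > max_gap."""
    windows: List[Window] = []
    for line in lines:
        m = REFUSED_RE.match(line)
        if not m:
            continue  # not a REFUSED reply (queries, forwards, replies, ...)
        ts = datetime.strptime(f"{year} {m.group(1)}", "%Y %b %d %H:%M:%S")
        if windows and ts - windows[-1][1] <= max_gap:
            start, _, count = windows[-1]
            windows[-1] = (start, ts, count + 1)  # extend the current outage
        else:
            windows.append((ts, ts, 1))  # start a new outage
    return windows
```

Assuming the default log location, something like `for start, end, n in refused_windows(open("/var/log/pihole.log"), 2022): print(start, end - start, n)` would list each outage with its duration and number of refused queries.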

The old pihole installation has never exhibited this behaviour. At first I suspected a genuine upstream problem, because the old install uses OpenDNS and I'm trying Cloudflare on the new one. However, switching the new pihole to OpenDNS has not fixed the problem.

Hypotheses

I can't think of any reason why this would happen, particularly only on this pihole installation. The internet connection is quite stable, and this pihole hasn't been rebooted or otherwise disturbed recently.

The only scenario I can come up with is something like:

- A very brief transient network failure occurs

- A client requests DNS resolution during this loss of connectivity

- Pihole/dnsmasq queries all of its upstream servers, finds it can't reach any of them, and returns REFUSED to the client

- Pihole/dnsmasq caches this failure, and continues to return REFUSED for a period of time even once the upstream network issue has cleared

- Eventually the negative-cache entry expires and behaviour returns to normal

However, I don't think dnsmasq caches connection errors, and this wouldn't explain why the outage sometimes lasts 1-2 minutes and sometimes 5 minutes. Also, apps with existing connections keep working fine, so I'm quite convinced it's not a connectivity issue.