My current hosting setup is similar to that of many others:

1. Personal Domain Management
   1. Hosted on Azure DNS
   2. CNAME records pointed to DuckDNS domain
2. Dynamic DNS Management
   1. Hosted on DuckDNS
   2. DuckDNS domain resolves to my home's public IP address
3. Gateway Management
   1. Fibre ONT in bridge mode
   2. Netgear Orbi RBK852 as router
   3. Router configured to forward requests on ports 80 and 443
   4. Target is edge server
4. Edge Management
   1. Nginx Proxy Manager is reverse proxy hosted on edge server
   2. Receives requests on ports 80 and 443
   3. Forwards [subdomain].[domain] requests to appropriate local services

There are many different ways to do this and multiple hosting sites or services that can be used, but this approach is working very well for me.

Azure DNS holds the CNAME records that point each subdomain at the DuckDNS domain, DuckDNS resolves that domain to my current public IP address, and the router forwards ports 80 and 443 to the edge server. NPM then routes each request to the correct service based on the requested hostname.
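To make the chain explicit, here is a toy Python model of the hops. It is only a sketch: `myhome.duckdns.org`, the public IP `203.0.113.10` (a documentation-only address), `app.example.com` and the upstream `http://192.168.1.50:3000` are hypothetical placeholders, not my real values.

```python
# Toy model of the external request path. Every name/address except
# example.com and the edge server 192.168.1.91 is a hypothetical placeholder.
AZURE_CNAMES = {"app.example.com": "myhome.duckdns.org"}     # Azure DNS
DUCKDNS_A = {"myhome.duckdns.org": "203.0.113.10"}           # DuckDNS (public IP)
ROUTER_FORWARDS = {80: "192.168.1.91", 443: "192.168.1.91"}  # Orbi port forwards
NPM_HOSTS = {"app.example.com": "http://192.168.1.50:3000"}  # NPM proxy hosts


def resolve(name: str) -> str:
    """Follow the Azure CNAME (if any) and return the DuckDNS A record."""
    target = AZURE_CNAMES.get(name, name)
    return DUCKDNS_A[target]


def trace_request(host: str, port: int) -> str:
    """Describe each hop a request for host:port takes to reach a service."""
    public_ip = resolve(host)     # DNS: Azure CNAME -> DuckDNS A record
    edge = ROUTER_FORWARDS[port]  # router forwards 80/443 to the edge server
    upstream = NPM_HOSTS[host]    # NPM picks the proxy host by hostname
    return f"{public_ip}:{port} -> {edge}:{port} -> {upstream}"


if __name__ == "__main__":
    print(trace_request("app.example.com", 443))
```

The internal setup I'm after is the same chain minus Azure and DuckDNS: Pi-hole would have to play the role of `resolve()` for `*.example.lan`.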

How can I best use Pi-hole (dnsmasq) to do the same for an internal domain with subdomains?

Let's say I have the following:

- External Domain: example.com
- Internal Domain: example.lan
- Router / Gateway: 192.168.1.1 (router)
- Edge Server 1: 192.168.1.91 (edge1)
- Edge Server 2: 192.168.1.92 (edge2)
- NPM Address: http://192.168.1.91:81/ or http://edge1:81/
- Pi-hole1 Address: http://192.168.1.91:8080/admin/ or http://edge1:8080/admin/
- Pi-hole2 Address: http://192.168.1.92:8080/admin/ or http://edge2:8080/admin/

Local queries are resolved via DNS, so they should be forwarded to the two Pi-hole servers.

Environment variables have been set to enable the following configurations:

01-pihole.conf

```
domain-needed
expand-hosts
bogus-priv
dnssec
```

02-pihole-dhcp.conf

```
domain=example.lan
local=/example.lan/
```

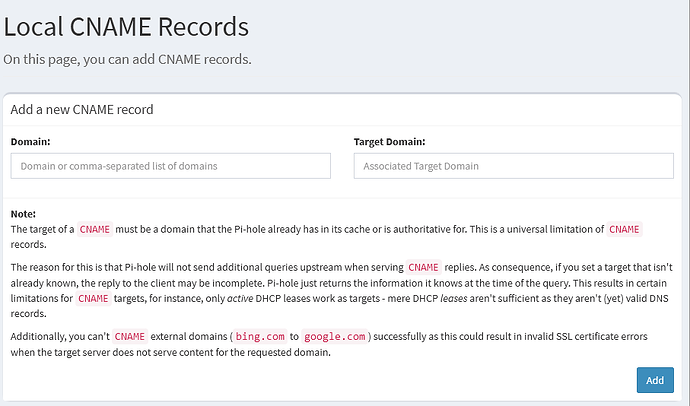

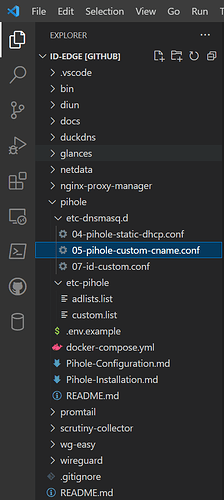
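Here is how I read three of those options from the dnsmasq man page, as a toy Python model that ignores the `cname=` lines: `expand-hosts` also makes bare hosts-file names available as `<name>.example.lan`, `local=/example.lan/` means names in that domain are answered from local data only (never forwarded), and `domain-needed` keeps bare names from being forwarded. The hosts entries for `edge1`/`edge2` and the name `nas.example.lan` are assumptions for illustration.

```python
# Toy model of expand-hosts, local=/example.lan/ and domain-needed, as I read
# the dnsmasq man page. The HOSTS entries are assumed, not confirmed.
DOMAIN = "example.lan"
HOSTS = {"edge1": "192.168.1.91", "edge2": "192.168.1.92"}


def local_records(hosts: dict[str, str], domain: str) -> dict[str, str]:
    """expand-hosts: bare names from the hosts file also get '.<domain>'."""
    records = dict(hosts)
    for name, ip in hosts.items():
        if "." not in name:
            records[f"{name}.{domain}"] = ip
    return records


def answer(qname: str, records: dict[str, str], domain: str = DOMAIN) -> str:
    """Return a local IP, NXDOMAIN, or note that the query goes upstream."""
    if qname in records:
        return records[qname]
    if "." not in qname:
        return "NXDOMAIN"  # domain-needed: bare names are never forwarded
    if qname == domain or qname.endswith("." + domain):
        return "NXDOMAIN"  # local=/example.lan/: answered from local data only
    return "forwarded upstream"


if __name__ == "__main__":
    recs = local_records(HOSTS, DOMAIN)
    for q in ("edge1.example.lan", "nas.example.lan", "example.com"):
        print(q, "->", answer(q, recs))
```

If this reading is right, nothing in these two files gives `example.lan` itself an address.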

05-pihole-custom-cname.conf

```
cname=pihole1.example.lan,example.lan
cname=pihole2.example.lan,example.lan
cname=homeassistant.example.lan,example.lan
```
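As I understand it, a CNAME answer only helps the client if the server can also supply an address for the target. The toy model below captures that assumption: the CNAME is always returned, and an A record for the target is appended only when a local record for it exists. The local A record for `example.lan` in the second call is hypothetical (it could come from something like a hosts-file entry or a `host-record=` line); my current config does not define one.

```python
# Toy model of the cname= lines above. Assumption: dnsmasq returns the CNAME,
# and only appends an A record when it has a *local* record for the target.
CNAMES = {
    "pihole1.example.lan": "example.lan",
    "pihole2.example.lan": "example.lan",
    "homeassistant.example.lan": "example.lan",
}


def lookup_a(qname: str, cnames: dict[str, str],
             local_a: dict[str, str]) -> list[tuple[str, str, str]]:
    """Build the answer section for an A query as (name, type, data) tuples."""
    answers = []
    name, seen = qname, set()
    while name in cnames and name not in seen:  # follow CNAME chains, no loops
        seen.add(name)
        answers.append((name, "CNAME", cnames[name]))
        name = cnames[name]
    if name in local_a:
        answers.append((name, "A", local_a[name]))
    return answers


if __name__ == "__main__":
    # Current config: nothing local defines example.lan itself.
    print(lookup_a("pihole1.example.lan", CNAMES, {}))
    # Hypothetical: a local A record for example.lan pointing at NPM on edge1.
    print(lookup_a("pihole1.example.lan", CNAMES, {"example.lan": "192.168.1.91"}))
```

The first call produces only the CNAME, which is the same shape as the `dig` output at the end of this post.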

I have Nginx Proxy Manager configured with a proxy host like this:

1. Add Proxy Host
   1. Domain Names: pihole1.example.lan
   2. Scheme: http
   3. Forward Hostname / IP: edge1
   4. Forward Port: 8080
   5. Cache Assets: Disabled
   6. Block Common Exploits: Disabled
   7. Websockets Support: Enabled
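For context, this is roughly how I picture NPM choosing a proxy host: it matches the requested hostname against each proxy host's Domain Names and forwards to `scheme://host:port`. This is a toy model, not NPM's code; only the `pihole1` entry comes from my setup, and the `homeassistant` upstream (`edge2:8123`) is a hypothetical placeholder.

```python
# Toy model of NPM-style host matching; the homeassistant entry is hypothetical.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ProxyHost:
    domain_names: tuple[str, ...]
    scheme: str
    forward_host: str
    forward_port: int


PROXY_HOSTS = [
    ProxyHost(("pihole1.example.lan",), "http", "edge1", 8080),
    ProxyHost(("homeassistant.example.lan",), "http", "edge2", 8123),  # hypothetical
]


def upstream_for(host_header: str, hosts: list[ProxyHost]) -> Optional[str]:
    """Return the URL the request would be proxied to, or None if no match."""
    host = host_header.split(":", 1)[0].lower()  # drop an optional :port
    for ph in hosts:
        if host in ph.domain_names:
            return f"{ph.scheme}://{ph.forward_host}:{ph.forward_port}"
    return None  # no proxy host matched; NPM would serve its default site


if __name__ == "__main__":
    print(upstream_for("pihole1.example.lan", PROXY_HOSTS))
```

A browser only reaches this step if `pihole1.example.lan` first resolves to 192.168.1.91, where NPM listens on port 80.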

I am missing something, and I don't know what it is. I have a custom configuration file in /etc/dnsmasq.d/ where I've tried the following settings:

07-custom.conf

```
server=/example.lan/192.168.1.91
```

or

```
address=/example.lan/192.168.1.91
```
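My understanding of the difference between the two variants, from the dnsmasq man page, sketched as a toy Python model: `server=/example.lan/IP` sends queries for the domain to the DNS server at that IP, while `address=/example.lan/IP` answers `example.lan` and every name under it with that IP directly. The function names are made up for illustration.

```python
# Toy model of the two 07-custom.conf variants, as I read the dnsmasq man page.
def in_domain(qname: str, domain: str) -> bool:
    """True for the domain itself and any name underneath it."""
    return qname == domain or qname.endswith("." + domain)


def handle(qname: str, option: str,
           domain: str = "example.lan", ip: str = "192.168.1.91") -> str:
    """Describe what dnsmasq would do with an A query under each option."""
    if not in_domain(qname, domain):
        return "normal upstream forwarding"
    if option == "server":   # server=/example.lan/192.168.1.91
        return f"forward the query to the DNS server at {ip}"
    if option == "address":  # address=/example.lan/192.168.1.91
        return f"answer A {ip}"
    raise ValueError(f"unknown option: {option}")


if __name__ == "__main__":
    for opt in ("server", "address"):
        print(opt, "->", handle("pihole1.example.lan", opt))
```

If Pi-hole1's DNS listens on 192.168.1.91, the `server=` variant points it back at itself. How either option interacts with the `cname=` lines for the same names is something I haven't confirmed.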

But neither seems to work: the CNAME record comes back, but nothing resolves it to the reverse proxy's address:

```
$ docker exec pihole dig @192.168.1.1 pihole1.example.lan

; <<>> DiG 9.16.37-Debian <<>> @192.168.1.1 pihole1.example.lan
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 18175
;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;pihole1.example.lan. IN A

;; ANSWER SECTION:
pihole1.example.lan. 0 IN CNAME example.lan.

;; Query time: 0 msec
;; SERVER: 192.168.1.1#53(192.168.1.1)
;; WHEN: Tue Mar 14 10:15:24 MDT 2023
;; MSG SIZE rcvd: 85
```
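To check quickly whether an A record ever comes back with the CNAME while I try different configs, a small helper like this could parse dig's default text output. It is a hypothetical helper of my own, not part of Pi-hole or dig, and it assumes each answer line has the usual `name TTL class type data` layout.

```python
# Hypothetical helper (not part of Pi-hole or dig) that extracts the ANSWER
# SECTION from dig's default text output.
def answer_records(dig_output: str) -> list[tuple[str, str, str]]:
    """Return (name, type, data) for each record in the ANSWER SECTION."""
    records = []
    in_answer = False
    for raw in dig_output.splitlines():
        line = raw.strip()
        if line.startswith(";; ANSWER SECTION:"):
            in_answer = True
        elif in_answer and line.startswith(";"):
            break  # the next section or the ";; Query time" footer
        elif in_answer and line:
            name, _ttl, _class, rtype, data = line.split(None, 4)
            records.append((name, rtype, data))
    return records


def has_a_record(dig_output: str) -> bool:
    """True if the ANSWER SECTION contains at least one A record."""
    return any(rtype == "A" for _, rtype, _ in answer_records(dig_output))


SAMPLE = (";; ANSWER SECTION:\n"
          "pihole1.example.lan. 0 IN CNAME example.lan.\n"
          "\n"
          ";; Query time: 0 msec\n")

if __name__ == "__main__":
    print(answer_records(SAMPLE), has_a_record(SAMPLE))
```

The text can come from `subprocess.run(["docker", "exec", "pihole", "dig", "@192.168.1.1", "pihole1.example.lan"], capture_output=True, text=True).stdout`.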

Has anyone done this, or is there any tutorial or explanation available?

Thank you!