Sorry for reopening a can of worms. I HAVE followed the unbound instructions and installed my own local DNS. HOWEVER, upon careful re-reading of the documentation, it seems that the algorithm in Pi-hole, as also described in Redirecting..., is that if EVER a domain enters the Pi-hole CACHE, it will NOT be blocked by new rules, no matter how frequently you update the rules (within the TTL, which you must assume will be large if somebody is evil).

WHY is Pi-hole NOT re-checking cached entries against new blacklist entries? In my opinion (no jokes about IMNSHO), just my opinion: IF for efficiency you check the cache before the blacklists, then at least you should PURGE from the cache the entries for evil sites that you may have resolved and cached in the past but that are now blocked. Of course, this assumes that clients also purge their caches frequently. In other words, if I DIG (or use my client after flushing its DNS resolver cache), I want NEW blacklist entries to take effect, not to be fooled by evil cached entries that behaved well for a while, waiting with a huge TTL to get into would-be-future-victims' caches and be, effectively, "whitelisted by the cache". The local cache should NOT imply automatic, almost-forever (until TTL) whitelisting.

My 2 cents... Disclaimer: I humbly ask because, even though I know of the RTSL method, I want an authoritative answer from you about what you think the code SHOULD do and what it ACTUALLY DOES. Thanx. Caps for emphasis, not shouting; YMMV, and other usual disclaimers apply.
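The purge suggested above can be sketched as a toy Python model rather than anything taken from Pi-hole: the resolver keeps the cache-first order but evicts a domain's cached answer as soon as that domain is blacklisted. The `ToyResolver` class, its method names, the injectable clock and the placeholder upstream data (`evil.example` and a documentation-range address) are all hypothetical.

```python
import time


class ToyResolver:
    """Hypothetical caching resolver that purges newly blocked domains (not Pi-hole's code)."""

    def __init__(self, upstream, clock=time.monotonic):
        self.upstream = upstream  # domain -> (address, ttl_seconds); stands in for a real upstream
        self.clock = clock        # injectable so the TTL logic can be tested
        self.cache = {}           # domain -> (address, expires_at)
        self.blacklist = set()

    def blacklist_add(self, domain):
        self.blacklist.add(domain)
        # The suggested fix: evict any cached answer for the newly blocked domain,
        # so a large TTL cannot keep it reachable.
        self.cache.pop(domain, None)

    def resolve(self, domain):
        now = self.clock()
        cached = self.cache.get(domain)
        if cached is not None and cached[1] > now:  # cache is still checked first
            return cached[0]
        if domain in self.blacklist:                # then the blacklist
            return "0.0.0.0"
        address, ttl = self.upstream[domain]        # then upstream, caching the answer
        self.cache[domain] = (address, now + ttl)
        return address


r = ToyResolver({"evil.example": ("203.0.113.7", 86400)})  # placeholder data
print(r.resolve("evil.example"))  # 203.0.113.7, now cached for a day
r.blacklist_add("evil.example")
print(r.resolve("evil.example"))  # 0.0.0.0, despite the long TTL
```

In this toy, keeping the cache-first order is harmless because the eviction guarantees that every newly blocked domain falls through to the blacklist check.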

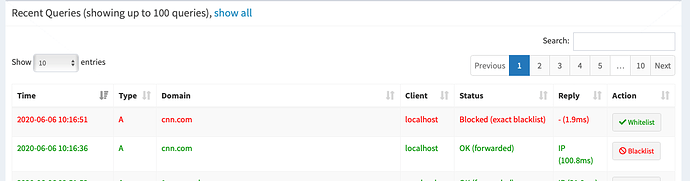

I have not reviewed the code for the Pi-hole cache, but this test indicates that a domain is blocked immediately when it is added to the blacklist (even with a high min-TTL specified in unbound):

    dig cnn.com

    ; <<>> DiG 9.11.5-P4-5.1+deb10u1-Raspbian <<>> cnn.com
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 56011
    ;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

    ;; OPT PSEUDOSECTION:
    ; EDNS: version: 0, flags:; udp: 4096
    ;; QUESTION SECTION:
    ;cnn.com. IN A

    ;; ANSWER SECTION:
    cnn.com. 3600 IN A 151.101.129.67
    cnn.com. 3600 IN A 151.101.1.67
    cnn.com. 3600 IN A 151.101.193.67
    cnn.com. 3600 IN A 151.101.65.67

    ;; Query time: 101 msec
    ;; SERVER: 127.0.0.1#53(127.0.0.1)
    ;; WHEN: Sat Jun 06 10:16:37 CDT 2020
    ;; MSG SIZE rcvd: 100

    pi@Pi-3B-DEV:~ $ pihole -b cnn.com
    [i] Adding cnn.com to the blacklist...
    [✓] Reloading DNS lists
    pi@Pi-3B-DEV:~ $ dig cnn.com

    ; <<>> DiG 9.11.5-P4-5.1+deb10u1-Raspbian <<>> cnn.com
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 38709
    ;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

    ;; QUESTION SECTION:
    ;cnn.com. IN A

    ;; ANSWER SECTION:
    cnn.com. 2 IN A 0.0.0.0

    ;; Query time: 2 msec
    ;; SERVER: 127.0.0.1#53(127.0.0.1)
    ;; WHEN: Sat Jun 06 10:16:51 CDT 2020
    ;; MSG SIZE rcvd: 41

Hey, if this is how it works, great. My concern is that, according to the documented order of things, the code is doing what you wanted it to do rather than what is documented, so the doc is wrong. Please fix the doc then... it's important. The whole point of Pi-hole is not just to never see bad sites, but to STOP seeing bad sites. The usual loop is: you see a "bad" site, and you either do nothing and/or report it. Once the "bad" site has made it into the blacklist, you want users to be prevented from going to it AGAIN. Otherwise, the strategy for "bad" sites to defeat Pi-hole would be to behave well for a while, with the TTL set as high as possible, wait until would-be victims cache it, and then be immune to blacklists that, again according to the doc I referred to, are checked ONLY if the domain is not in the local cache. THANX

See: step 2 "trumps" step 3... for any entry that got into the cache before the updated blacklist was seen by Pi-hole.

from Redirecting...

A standard Pi-hole installation will do it as follows:

- Your client asks the Pi-hole: "Who is pi-hole.net?"
- Your Pi-hole will check its cache and reply if the answer is already known.
- Your Pi-hole will check the blocking lists and reply if the domain is blocked.
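To make the concern concrete, here is a toy Python model of the three quoted steps read literally, with no cache purge when the lists change. Everything in it (the `naive_lookup` function, the dict-based cache, the placeholder domain and documentation-range address) is hypothetical; it models this reading of the docs, not Pi-hole's actual code.

```python
def naive_lookup(domain, cache, blocklist, upstream):
    """Toy model: check the cache first, then the blocklist, then ask upstream."""
    # Step 2: reply from the cache if the answer is already known.
    if domain in cache:
        return cache[domain]
    # Step 3: reply with the blocking address if the domain is blocked.
    if domain in blocklist:
        return "0.0.0.0"
    # Otherwise forward upstream and remember the answer (TTL ignored here).
    answer = upstream[domain]
    cache[domain] = answer
    return answer


cache, blocklist = {}, set()
upstream = {"evil.example": "203.0.113.7"}  # placeholder domain and address

first = naive_lookup("evil.example", cache, blocklist, upstream)
blocklist.add("evil.example")               # blacklisted *after* it was cached
second = naive_lookup("evil.example", cache, blocklist, upstream)
print(first, second)  # 203.0.113.7 203.0.113.7 (the stale cached answer wins)
```

Under this literal reading, a stale entry would survive until its TTL runs out, which is exactly the "step 2 trumps step 3" worry.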

You are jumping to conclusions here.

The sequence of steps you quote is describing a single lookup. It is completely oblivious to what is happening when you blacklist a domain.

So the docs can be right while the observed behaviour is correct at the same time (though, just like jfb, I haven't looked into the code to confirm this).

But I appreciate your observation as such, as it seems to point out that Pi-hole's DNS caching documentation may be lacking in this regard.

The output I provided earlier shows that when a domain is blacklisted, it is blocked immediately, even with a long TTL for the domain.

If Pi-hole removes the entry from the cache (as it appears to do), then the docs are correct: the cache is checked, the entry does not exist there, then the blocklist is checked and the domain is found there.

Please run the test yourself and see if your results are different.

If I could, I would. I'm a bit busy trying to figure out how on earth the blinkforhome Amazon Link xt2 module managed to reply to queries over its own undocumented open port 53!!!

Correct

Because we flush the cache on list manipulations. Every domain will be checked again thereafter before any reply is served to the user. Even if there is still a valid record for this domain in the cache, this doesn't matter, as we short-circuit the DNS query before even looking into the DNS cache when a domain is to be blocked.
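The two mechanisms described here (flushing the cache on any list change, and deciding on blocking before the cache is consulted) can be modeled in a few lines of Python. This is a hypothetical toy, not Pi-hole's actual implementation; the `BlockFirstResolver` class, its method names and the TTL-free cache are invented for illustration, and the address is taken from the dig output earlier in the thread.

```python
class BlockFirstResolver:
    """Hypothetical toy model: blocking is decided before the cache is consulted."""

    def __init__(self, upstream):
        self.upstream = upstream  # domain -> address; stands in for a real upstream
        self.cache = {}           # domain -> address (TTL omitted for brevity)
        self.blocklist = set()

    def update_lists(self, added=(), removed=()):
        self.blocklist.update(added)
        self.blocklist.difference_update(removed)
        # Any list manipulation flushes the whole cache.
        self.cache.clear()

    def resolve(self, domain):
        # Short-circuit: a blocked domain never reaches the cache lookup.
        if domain in self.blocklist:
            return "0.0.0.0"
        if domain not in self.cache:
            self.cache[domain] = self.upstream[domain]  # miss: ask upstream, then cache
        return self.cache[domain]


r = BlockFirstResolver({"cnn.com": "151.101.1.67"})
print(r.resolve("cnn.com"))        # 151.101.1.67 (now cached)
r.update_lists(added=["cnn.com"])  # analogous to `pihole -b cnn.com`
print(r.resolve("cnn.com"))        # 0.0.0.0
```

In this toy model, either mechanism alone would already close the stale-cache gap; doing both is belt and braces.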

We're not doing this.

May I ask who this comment is meant for?

If it is meant for me, then I don't really get it:

When it is not blocked, we look up the domain in the cache. If it is found there, we serve the content (no upstream traffic). If it is not in the cache, we ask upstream (generating traffic and a reply delay) and cache the result afterwards. I don't see why the cache would lose its purpose in this case.
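The non-blocked path described here (cache hit: answer locally; cache miss: ask upstream, then cache until the TTL expires) as a hypothetical Python sketch. The `TtlCache` class, the `ask_upstream` callback and the fake clock are illustrative assumptions, not Pi-hole code; the address and the 3600 s TTL come from the dig output earlier in the thread.

```python
import time


class TtlCache:
    """Hypothetical toy DNS cache: serve hits locally, go upstream only on a miss."""

    def __init__(self, ask_upstream, clock=time.monotonic):
        self.ask_upstream = ask_upstream  # callable: domain -> (address, ttl_seconds)
        self.clock = clock
        self.entries = {}                 # domain -> (address, expires_at)
        self.upstream_queries = 0         # counts queries actually sent upstream

    def lookup(self, domain):
        now = self.clock()
        hit = self.entries.get(domain)
        if hit is not None and hit[1] > now:
            return hit[0]                 # cache hit: no upstream traffic, no delay
        self.upstream_queries += 1        # miss or expired: ask upstream
        address, ttl = self.ask_upstream(domain)
        self.entries[domain] = (address, now + ttl)
        return address


fake_now = [0.0]
cache = TtlCache(lambda d: ("151.101.1.67", 3600), clock=lambda: fake_now[0])
cache.lookup("cnn.com")        # miss: one upstream query
cache.lookup("cnn.com")        # hit: served from the cache
fake_now[0] = 3601.0           # the 3600 s TTL has expired
cache.lookup("cnn.com")        # miss again: second upstream query
print(cache.upstream_queries)  # 2
```

The counter shows the saving referred to above: repeat lookups within the TTL generate no upstream traffic.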
