Running `pihole checkout ftl new/CNAME_inspection_details` allowed me to identify the CNAME that was being blocked (`gstaticadssl.l.google.com`).

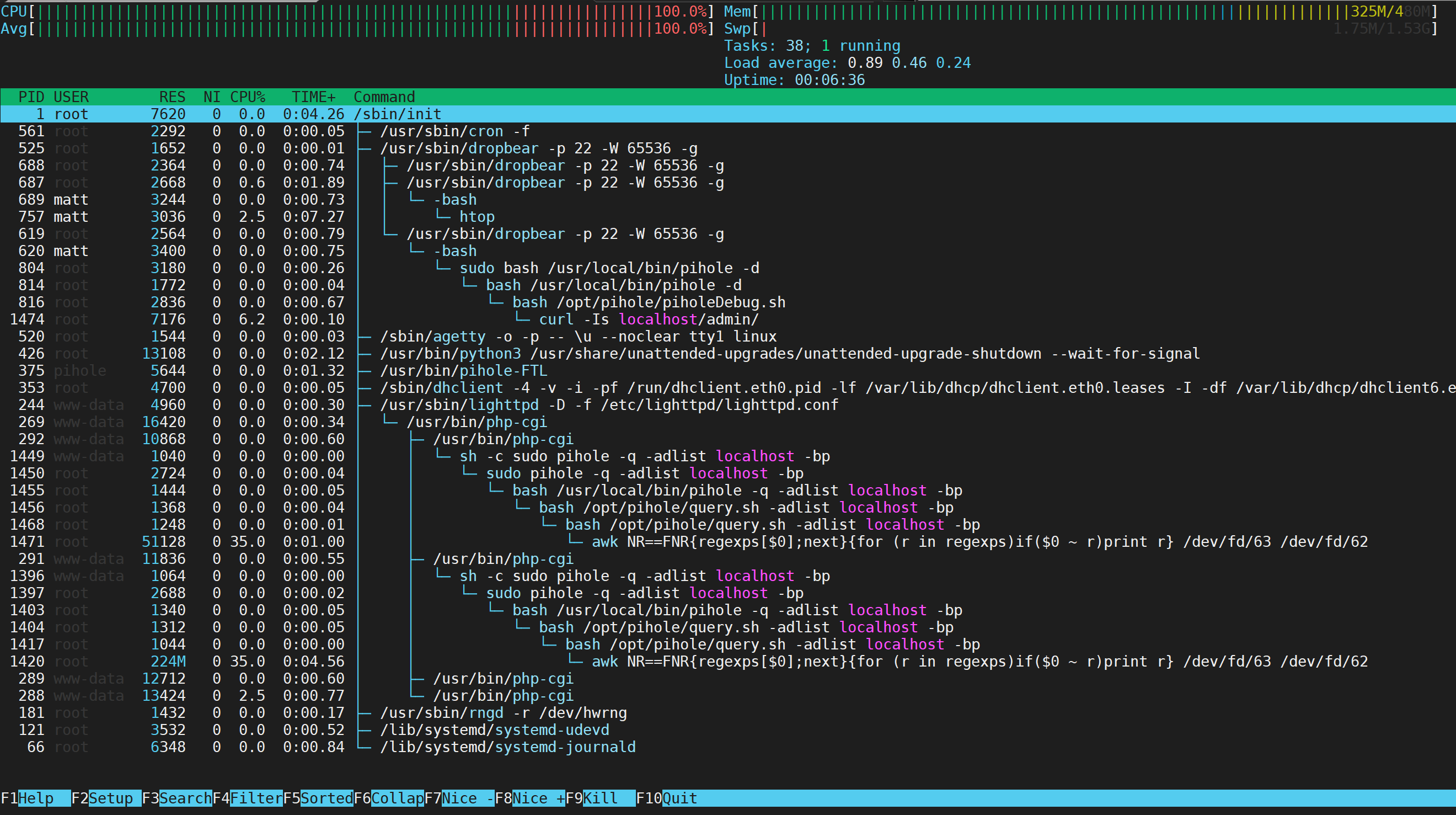

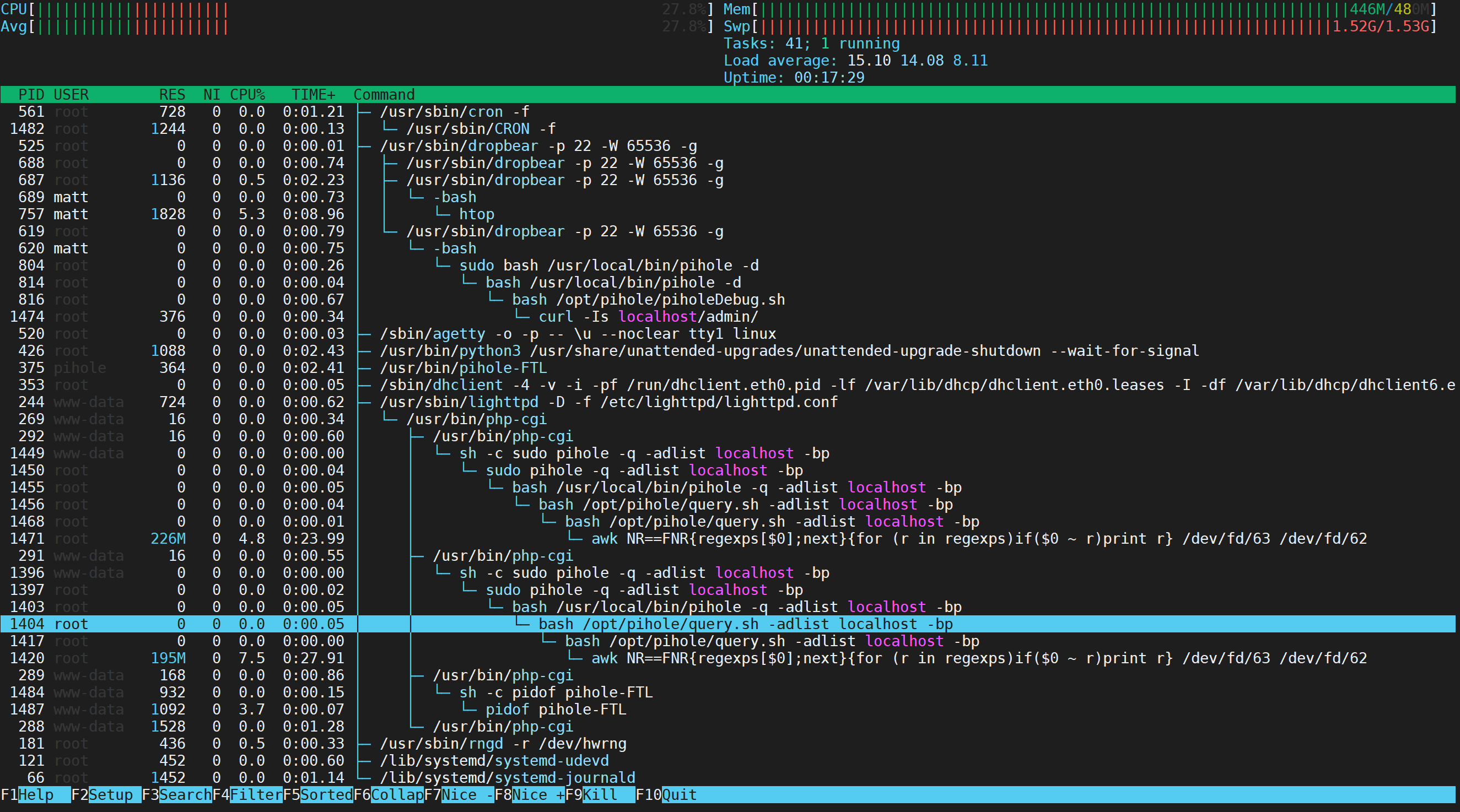

However, on the new branch, querying the lists is now SIGNIFICANTLY more resource-intensive: it went from ~20-30 seconds to 10+ minutes and slowed my puny Pi Zero to a crawl (load averages pushing 8.0), breaking DNS resolution for the duration.

When the query finally finished, it printed this message alongside the expected list data:

/opt/pihole/query.sh: line 37: 20058 Killed awk 'NR==FNR{regexps[$0];next}{for (r in regexps)if($0 ~ r)print r}' <(echo "${lists}") <(echo "${domain}") 2> /dev/null

Not sure if that helps...
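
For anyone trying to reproduce this, here is a minimal sketch of what the awk program in that message appears to do. The three regex entries are made-up sample data, and I've swapped the `<(echo ...)` process substitutions for temp files so it runs in plain `sh`; the awk program itself is copied from the error output. It loads every line of the first input as a regex, then tests each line of the second input against every one of them, so the work grows with the number of entries in `${lists}`. "Killed" usually means the process received SIGKILL, which on a low-memory box may well be the kernel's OOM killer, but that is a guess on my part.

```shell
#!/bin/sh
# Hypothetical sample data: three regex entries plus the CNAME from this report.
regex_file=$(mktemp)
domain_file=$(mktemp)
printf '%s\n' '^ads\.' '\.google\.com$' 'tracker' > "$regex_file"
printf '%s\n' 'gstaticadssl.l.google.com' > "$domain_file"

# Same awk program as in the error message:
#   first file  -> every line is stored as a key in regexps[]
#   second file -> each line is tested against every stored regex,
#                  and each regex that matches is printed
matches=$(awk 'NR==FNR{regexps[$0];next}{for (r in regexps)if($0 ~ r)print r}' \
    "$regex_file" "$domain_file")

echo "$matches"

rm -f "$regex_file" "$domain_file"
```

With this sample data, only the `\.google\.com$` entry matches the CNAME, so that is the single line printed.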