For that to work, your nginx has to correctly handle the forward to http://<2nd-pihole-host>/admin.

Note that you have to specify /admin for access via IP address.
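
As a rough illustration of what "handling the forward" could look like, here is a minimal sketch of a plain nginx server block. The server name and the upstream address are placeholders I made up, not values from your setup, so substitute your own. If you manage nginx through a GUI front end, the generated config will look different.

```nginx
server {
    listen 80;
    # Hypothetical name for the second Pi-hole; replace with your own.
    server_name pihole2.example.lan;

    # Requests for the bare root are redirected to the admin interface,
    # so that http://pihole2.example.lan/ ends up at /admin/.
    location = / {
        return 302 /admin/;
    }

    # Everything else (including /admin/) is proxied to the Pi-hole host.
    location / {
        # 192.0.2.10 is a placeholder standing in for <2nd-pihole-host>.
        proxy_pass http://192.0.2.10;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```

The exact-match `location = /` only catches the root path, so requests that already include /admin pass straight through to the proxy block.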

Ah, I didn't realize the need to include "/admin" when using an IP address @Bucking_Horn. And now that you mention it - it seems I never had this properly configured in my NGINX config. I think I may have shoehorned a fix by creating a bookmark that included the redirect to "/admin" - I can't remember what I did, honestly. But now that I try to use NGINX's forward location, it isn't working - which is good, because that is to be expected.

Research

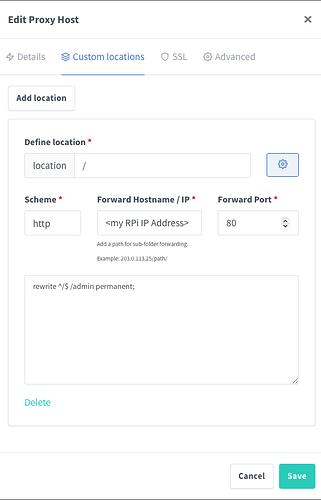

Looking at several forums/posts about this subject - like this one - it seems NGINX provides a simple fix to handle redirects for situations like this by applying a "custom location".

I applied this change, but it is still not working. I know this forum is for Pi-hole, but if anyone who has experience with NGINX's config for redirects can tell me what I am still doing wrong, I would be very grateful.

Your debug log shows that you have enabled Conditional Forwarding to allow local resolution through your router's DNS resolver, but you haven't configured a local domain name to go along with it.

Without that detail, Pi-hole would forward a client's requests for <hostname>.<search.domain> to its public upstreams, which naturally would not know anything about your local private network.

I guess the wording on Pi-hole's settings page read like it was optional. But you are telling me I do need to include this?